B2BaCEO: August 2023 Newsletter

I share anecdotal learnings and unpack three industry reports on the state of generative AI adoption. I plug my latest post on the enterprise LLM workflow. I introduce our new EIR, Mahmoud Arram.

08.18.2023 | By: Ashu Garg

August AI Roundup

August may be a month for vacations, yet the momentum around generative AI isn’t taking a break. My recent conversations with founders make one thing clear: enterprise demand for generative AI is real, and it’s growing. Sales push is giving way to demand pull, as buyers equipped with new budgets for generative AI initiatives seek enterprise-grade solutions.

At the same time, evidence of generative AI’s power to create meaningful business value is mounting. Consider a recent experiment conducted by one of my portfolio companies, Turing. They split their data science and engineering teams into three groups. The first group began using GitHub Copilot for the first time, the second group operated without it, and the third group continued their existing use of the tool. Over the course of five weeks, the first group saw a 38% bump in productivity, while the third group enjoyed a 9% increase. These results suggest that the benefits of generative AI tools aren’t just immediate: they grow over time. As smarter coding assistants that can understand and debug local codebases enter the market, expect ever larger gains.

A new study from The Economist sheds further light on the enterprise AI landscape. The study evaluated S&P 500 companies on several AI metrics, including AI mentions in patents and earnings calls, AI acquisitions, and AI-specific job listings, from January to June of this year. While tech giants like Nvidia and Amazon unsurprisingly dominated, the real headline lies in how AI expertise is permeating other data-rich sectors like insurance, healthcare, media, and retail. These industries are capitalizing on their unique data (think loan filings, patient records, digital viewing habits, and online shopping trends) and investing heavily in AI. Combined, they make up over half of the S&P.

Yet, for all the talk and enthusiasm, we’re just scratching the surface of what generative AI can achieve in production. Venture outside of big tech, and most companies are still in the proof of concept phase with small pilots and demos. The path from idea to implementation in the enterprise world can take anywhere from three to 18 months. Factor in the as-yet-unsolved challenges that generative AI presents, from data security to accuracy concerns, and we’re looking at a year, or maybe even two, before we see its full potential.

To get a clearer picture, I turned to three reports released this month: Bessemer’s “Cloud 100,” McKinsey’s 2023 “State of AI” report, and Databricks’ “CIO Perspectives on Generative AI.” Let’s explore each one.

Bessemer’s atmospheric view

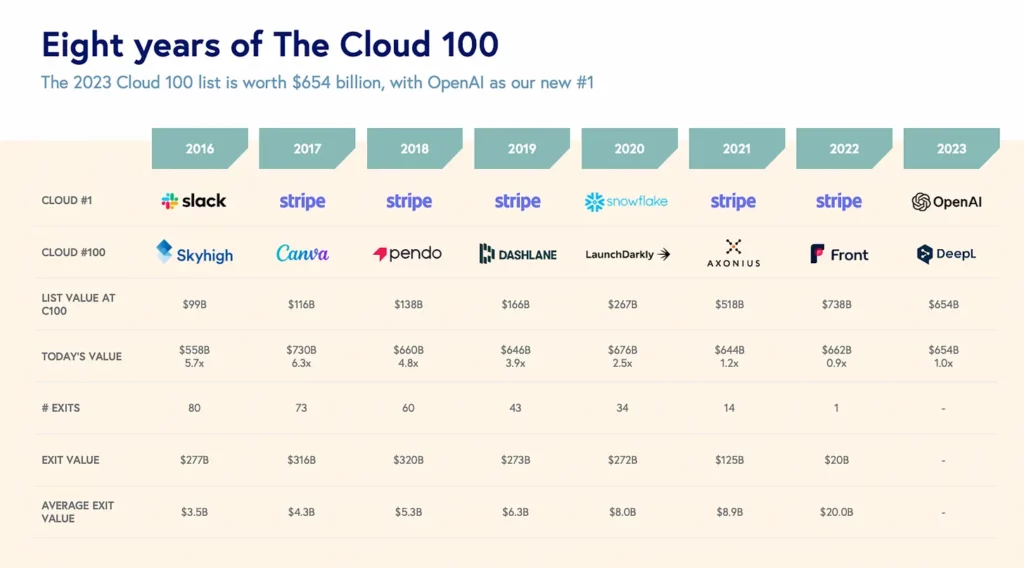

This year’s Cloud 100 marked the introduction of AI as a dedicated category, with five AI-native companies making their debuts: OpenAI, Anthropic, Midjourney, Hugging Face, and DeepL. Built from the ground up around AI, they command a total valuation of $36 billion. (This number excludes Midjourney, which has not conducted a publicly reported financing.) OpenAI clinched the top spot, nudging out Stripe, which has held the lead for five of the past six years. In second place is one of my personal investments, Databricks, bolstered by its ambition to become an end-to-end platform for enterprise AI: a bet that I explored in last month’s editorial. A striking 70% of companies on the list have already embedded AI, while 55% have unveiled a generative AI product or feature in the past eight months.

Other parts of the report are a bit more…overcast. For the first time since its inception, the list’s combined equity value shrank, falling 11% to $654 billion. The average growth rate of Cloud 100 companies also declined, dipping to 55% from 100% in 2022. With IPOs at a standstill, Figma was the only company to exit from the 2022 list, thanks to a $20 billion acquisition bid by Adobe, which remains in progress due to regulatory delays.

McKinsey’s global take

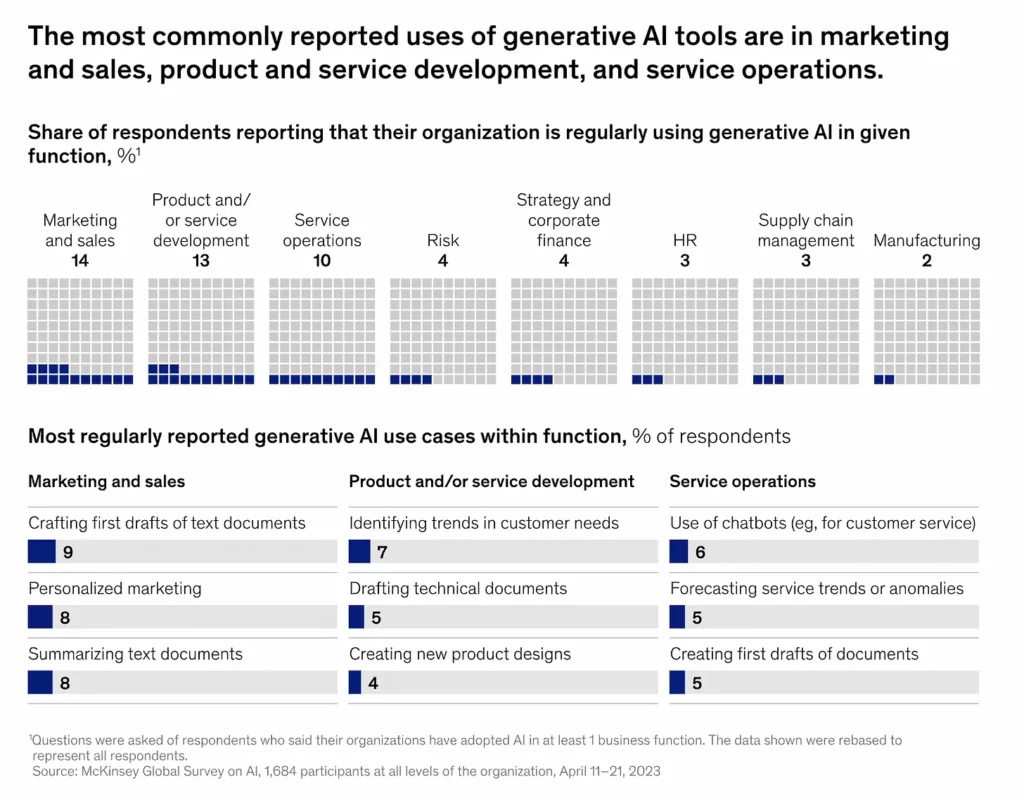

This year, McKinsey’s annual “State of AI” report makes a hard sell for generative AI. Fielding responses from over 1,600 leaders at all levels of the enterprise, their survey found that around one-third of companies (the exact percentage is not disclosed) have adopted generative AI in at least one business function. Marketing and sales departments lead the way, with 14% tapping into generative AI. They’re closely followed by product development at 13% and service operations (think customer care and back office support) at 10%. These are the same functions where traditional AI use is also most common. For other departments, such as risk, strategy and finance, and HR, adoption of generative AI dips to 4% or lower. This suggests that there’s ample room for this technology to expand its reach.

Interestingly, generative AI doesn’t seem to be driving overall enterprise AI adoption. The latter sits at a steady 55%, unchanged since 2021. Only 31% of organizations have integrated AI across more than two business functions, while a mere 23% credited AI for at least 5% of their EBIT last year. When we zero in on the frontrunners, the majority are focused on generative AI’s potential to catalyze revenue and product growth, rather than its ability to cut costs.

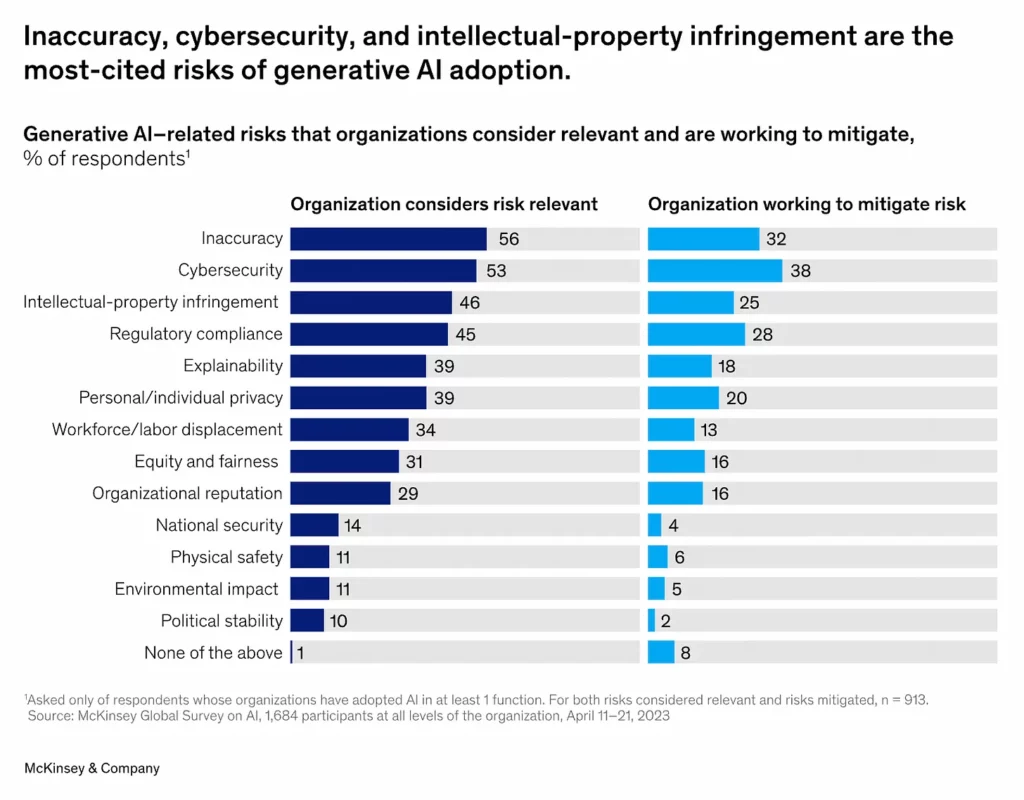

Across the board, the risks of generative AI remain largely unaddressed. Only 21% of companies that have adopted generative AI have established guardrails around its use at work. That’s despite broad-based recognition of its potential downsides, from inaccuracy, cybersecurity, and IP infringement to regulatory compliance, explainability, and data privacy. There’s a significant gap between awareness of these risks and the creation of policies, systems, and processes to actively address them, as the accompanying charts demonstrate. Without robust solutions to address these hurdles, enterprise adoption of generative AI may find itself stalled in the pilot stage. This could be a promising problem space for startups to play in, as several of our portfolio companies, like Anomalo, Arize, Fortanix, and Skyflow, are already showing.

Databricks weighs in

Grounded in seven in-depth interviews with senior executives and experts, Databricks’ report offers a different view. In 2022, Databricks found that, while 94% of organizations were leveraging AI (a much higher number than the 55% McKinsey reports, likely due to Databricks’ tech-biased sample), only 14% had ambitions to achieve “enterprise-wide AI” (defined as the integration of AI in at least five core business functions) by 2025. Based on insights gained from their recent series of interviews, Databricks is optimistic that generative AI will change this narrative. With its ability to make sense of unstructured data and complement a wide range of language- and knowledge-based tasks, generative AI promises to make enterprise-wide AI a much more common goal.

The other top-line message of Databricks’ interview study? Generative AI isn’t just about models and algorithms: it’s also a data engineering challenge. Successfully implementing generative AI demands a full-stack data strategy, including building efficient data pipelines, planning for scalability with distributed data storage and parallel processing, and ensuring security and compliance. To truly enable enterprise-wide adoption, this infrastructure must also allow non-technical users to easily interact with data via intuitive, natural-language-based interfaces. Given Databricks’ expertise in all things enterprise data, together with its recent acquisition of MosaicML, this messaging feels spot on.

Summing up

While we’ve yet to see generative AI appear in production at scale, it’s clear that the benefits of this technology are real and that it’s here to stay. As the pace of innovation continues to accelerate, and foundation models become more performant at a broader range of tasks, CEOs would be well advised to take this technology very seriously. Five years from now, companies that haven’t adopted generative AI could find themselves left in the digital dust.

Adopting LLMs in the Enterprise: A Three-Step Framework

To gain even more clarity about the specifics of adopting generative AI, the enterprise team at Foundation has spent the past several months connecting with builders, technologists, and CXOs across both startups and leading F500 companies. From these conversations, we created a three-step framework for integrating LLMs into enterprise products and workflows, which my partner, Jaya Gupta, and I share in our latest blog post. Below is a quick summary. Read the post for the full story.

Step 1: Identify your use case. NLP tasks that keep humans in the loop, from customer service and sales to marketing and product development, are good places to start.

Step 2: Choose your model

- Option 1 – DIY: Train from scratch. This offers total control but is costly.

- Option 2 – Off-the-Shelf: Buy ready-made. This is convenient but affords little room for customization.

- Option 3 – Middle Ground: Adapt pre-trained LLM. Think of this as a balance between options 1 and 2.

If you choose Option 2, the next decision is proprietary versus open-source.

- Proprietary models offer high performance and managed infrastructure, but they are expensive to run in production.

- Open-source models are transparent and flexible and offer full data control, yet their performance lags behind that of their closed-source counterparts.

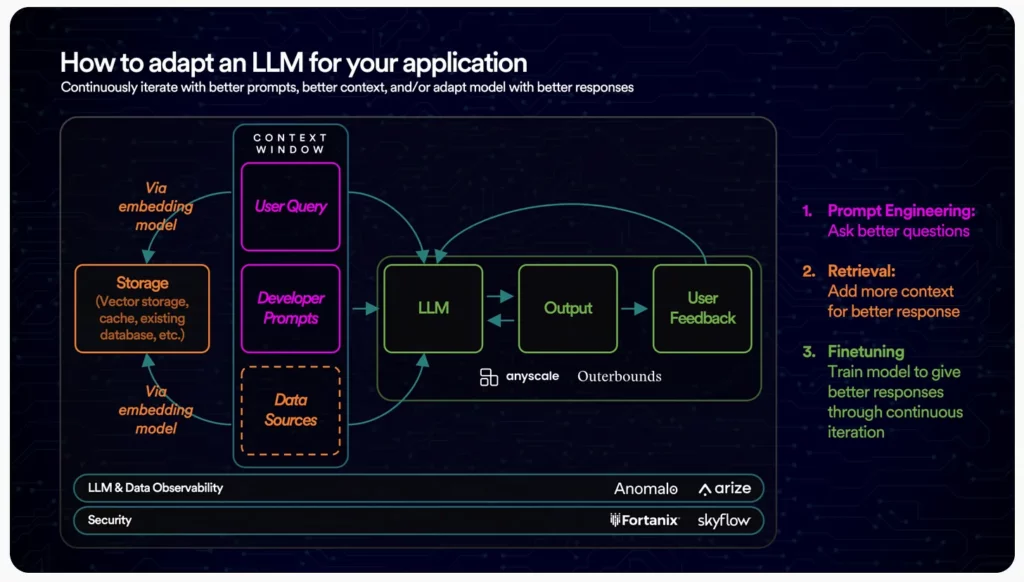

Step 3: Adapt and improve your LLM for your use case. Here are the three most common strategies:

1. Prompt engineering involves refining and optimizing input prompts to guide your LLM.

2. Context retrieval gives your LLM a “memory bank” by connecting it to external data sources.

3. Fine-tuning tailors your LLM to a given task using a domain-specific and/or proprietary dataset.
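To make the first two strategies concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a recommended implementation: the documents, the word-overlap scoring, and the function names (`retrieve`, `build_prompt`) are all hypothetical, and a production system would more likely use a search index or vector database for retrieval. No model is called; the sketch only assembles the prompt that you would then send to your LLM of choice. Fine-tuning is not shown, since it depends on the model and training stack you pick in Step 2.

```python
import re
from collections import Counter

# Hypothetical in-memory "external data source" for illustration only.
# A real deployment would typically query a search index or vector store.
DOCUMENTS = [
    "Refunds are issued within 14 days of a return request.",
    "Enterprise plans include single sign-on and audit logs.",
    "Support is available by chat on weekdays from 9am to 5pm.",
]


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Context retrieval (toy version): return the k documents that
    share the most word tokens with the question."""
    question_tokens = tokenize(question)

    def overlap(doc: str) -> int:
        # Counter intersection keeps the minimum count of each shared token.
        return sum((question_tokens & tokenize(doc)).values())

    return sorted(documents, key=overlap, reverse=True)[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Prompt engineering: wrap the question in explicit instructions
    and the retrieved context before it reaches the model."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "You are a support assistant. Answer using only the context below.\n"
        "If the context does not contain the answer, say you do not know.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\nAnswer:"
    )


question = "How long do refunds take?"
context = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, context)
print(prompt)
```

The two strategies compose naturally: retrieval decides what the model gets to see, and the prompt template decides how it is told to use it. Keeping them as separate functions makes it easier to swap in a better retriever later without touching the prompt.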

Meet our new EIR, Mahmoud Arram!

I’m thrilled to introduce Mahmoud Arram, our newest entrepreneur in residence! Mahmoud comes to Foundation’s enterprise team from Bluecore, an innovative multi-channel marketing personalization platform for e-commerce that he co-founded. Under his decade-long leadership as CTO, Bluecore partnered with leading retailers including Nike, Gap, Sephora, Express, NoBull, and Coach and raised over $230 million in venture capital.

Born in Egypt, Mahmoud came to the U.S. to earn his B.S. in computer and electrical engineering and decided to make it his home. Today, he’s based in Park Slope, Brooklyn, with his wife and three children. He credits FaceTime with helping him keep up with his siblings in Cairo, Germany, Canada, and Indonesia.

At Foundation, Mahmoud will be exploring generative AI applications for enterprise use cases. He’s specifically interested in code and architecture generation, few-shot learning for systems integration, assistive debugging, and auto comprehension of observability data.

Welcome, Mahmoud!

Portco Spotlight: Arcade

Telling the story of your product is hard, and today’s consumers want you to show them your product, not just talk about it. Arcade is an interactive demo platform that enables teams to effortlessly create beautiful demos in minutes. Designed for ease of use, Arcade removes the creative and technical barriers often felt by teams wanting to showcase their product on their website, in their content, or on social media. You can also leverage Arcade’s generative AI tools to write relevant copy and even narrate the demo with synthetic audio. Product-forward companies like Atlassian and Carta use Arcade to tell better product stories. Check it out here!

Published on 01.31.2023

Written by Foundation Capital