B2BaCEO Newsletter: June 2023

I share takeaways from FC’s inaugural Generative AI “Unconference.” I announce our Generative AI 25 list! I share my latest pod with AI pioneer Matei Zaharia. I debrief our event at AGI House.

06.10.2023 | By: Ashu Garg

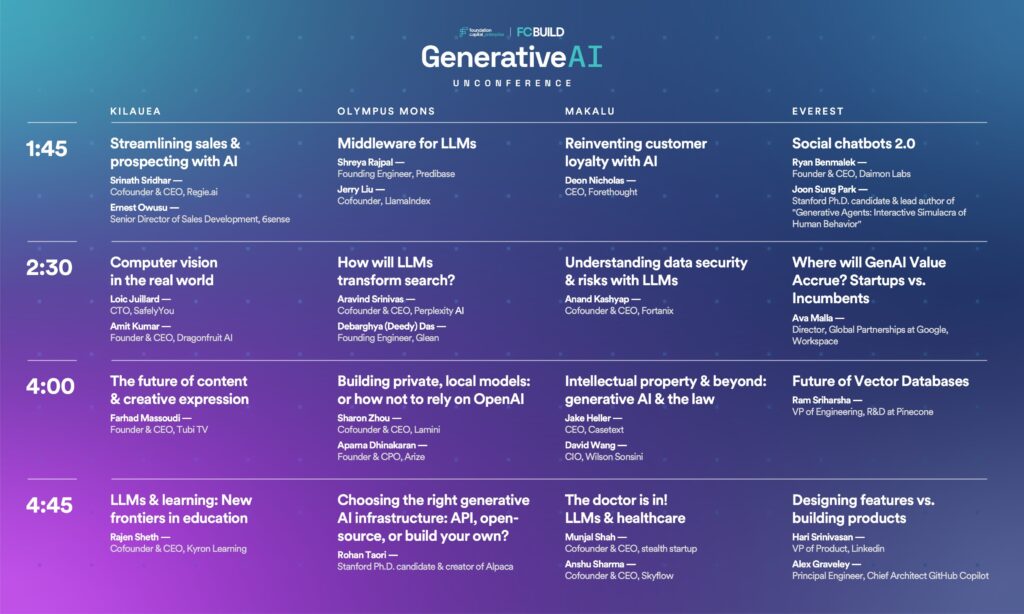

Last month, Foundation hosted our inaugural, invite-only Generative AI “Unconference” in San Francisco. We convened fifty of the top (human!) minds in AI, including founders, researchers, and industry experts, for an afternoon of small group discussions about building successful AI companies. All sessions were off the record to promote the free exchange of ideas. So, while I can’t share every juicy detail, what I can offer is the full schedule and some enticing photos, along with our team’s top five takeaways.

- AI natives have key advantages over AI incumbents

In AI, as in other technology waves, every aspiring founder (and investor!) wants to know: Will incumbents acquire innovation before startups can acquire distribution? Incumbents benefit from scale, distribution, and data; startups can counter with business model innovation, agility, and speed—which, with today’s supersonic pace of product evolution, may prove more strategic than ever. To win, startups will have to lean into their strength of quickly experimenting and shipping. Other strategies for startups include focusing on a specific vertical, building network effects, and bootstrapping data moats, which can deepen over time through product usage.

The key distinction here lies between being an “AI integrator” (the incumbents’ route) and being an “AI native” (unique to startups). Incumbents can simply “add AI” to existing product suites, which places startups at a distribution disadvantage. By contrast, being “AI native” means designing products from the ground up with AI at their core. Powered by net-new capabilities that make them the proverbial “10x better,” AI-native versions of legacy products can capture meaningful market share from (or even unseat!) incumbents. The challenge for startups is determining where these de novo, AI-native product opportunities lie. Because startups thrive on action and speed, the best way for founders to figure this out might simply be to jump in and try! - In AI, the old rules of building software applications still apply

Another hot-button question at the Unconference: How can builders add value around foundation models? Does the value lie in domain-specific data and customizations? Does it accrue through the product experience and serving logic built around the model? Are there other insertion points that founders should consider?

While foundation models will likely commoditize in the future, for now, model choice matters. From there, an AI product’s value depends on the architecture that developers build around that model. This includes technical decisions like prompts (including how their outputs are chained to both each other and external systems and tools), embeddings and their storage and retrieval mechanisms, context window management, and intuitive UX design that guides users in their product journeys.

It’s important to remember that generative AI is a tool, not a cure-all. The time-tested rules of product development still hold true. This means that every startup must begin in the old-fashioned way: by identifying a pressing problem among a target user base. Once a founder fully understands this problem (ideally, by talking to many of these target users!), the next step is to determine if and why generative AI is the best tool to tackle it. As in previous waves of product innovation, the best AI-native products will solve this problem from beginning to end. - UX matters just as much, if not more, than models

Which brings me to my next point: generative AI is not just a technology, it’s also a new way of engaging with technology. LLMs enable forms of human-computer-AI interaction that were previously unimaginable. By allowing us to express ourselves to computers in fluent, natural language, they make our current idiom of clicking, swiping, and scrolling seem antiquated in comparison.

This shift in how we interact with technology creates outsized opportunities for startups (the “AI natives”) while posing a potentially existential threat to incumbents (the “AI integrators”). Consider ChatGPT: far and away the fastest-growing startup in history. In essence, it’s two things: an LLM (GPT-3.5, followed by GPT-4) with an intuitive interface on top. Aligning GPT-3 to follow human instructions through supervised learning and human feedback was an important first step. Yet, alongside OpenAI’s technical breakthroughs, it was an interface innovation that made generative AI accessible and useful to everyday users.

Digital interfaces are our portals to the internet—and, consequently, to the world. For years, Google’s search box has mediated (and, in some sense, determined) our experience of the web. It’s widely accepted in tech circles that generative AI will replace the traditional search box with a more natural, conversational interface. The shift is underway from “search engines” to what Aravind Srinivas, cofounder and CEO of Perplexity and a session leader at the Unconference, calls “answer engines.”

Today, our interaction with Google also resembles a conversation, albeit a very clunky one. We ask Google a question, and it responds with a series of blue links. We click on a link, which sends another message to Google, as does the time we spend on the page, our behavior on that page, and so on. LLMs can transform this awkward conversation into a fluent, natural-language dialogue. This idiom—not “Google-ese”—promises to become the new interface not simply for search, but for all our interactions with computers.

The shift gives many reasons for optimism. Answer engines powered by LLMs could give rise to a more aligned internet that’s architected around users, not advertisers. In this new paradigm, users pay for enhanced productivity and knowledge, rather than having their attention auctioned to the highest bidder. As conversational interfaces gain traction, existing modes of internet distribution could be upended, dealing a further blow to incumbents. Who needs to visit Amazon.com to order paper towels when you can simply ask your AI?

The chat box is just the beginning of where AI-first interfaces will go. Expect much more innovation on this front, including chatbots that are voice first, equipped with tools, and capable of asking clarifying questions, as well as generative agents that can simulate human social behavior with striking precision. The consensus among Unconference participants was that we’re just one to two UX improvements away from a world where AI mediates all human communication. This interface revolution puts immense value, previously controlled by big tech, up for grabs. - It’s go-go days for open source AI

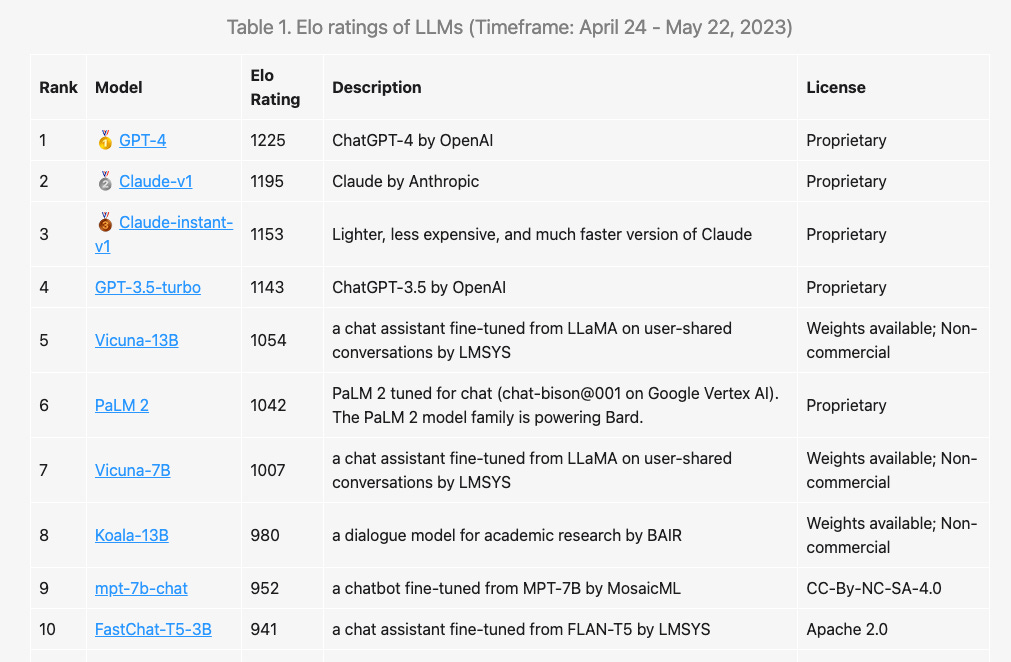

See table below for top ten most performant LLMs, evenly split between proprietary and open source.

Spring 2023 may be remembered as AI’s “Android moment.” Meta AI’s LLaMA, a family of research-use models whose performance rivals that of GPT-3, was the opening salvo. Then came Stanford’s Alpaca, an affordable, instruction-tuned version of LLaMA. This spawned a flock of herbivorous offspring, including Vicuna, Dolly (courtesy of Databricks), Koala, and Lamini, along with an entire family of open-source GPT-3 models from Cerebras. Today, Vicuna ranks fifth on LMSYS Org’s performance leaderboard behind proprietary offerings from OpenAI and Anthropic.

So far, much of the progress in deep learning has been in open source. Many of our Unconference participants—including one of our keynote speakers, Robert Nishihara, cofounder and CEO of Anyscale—are betting that this will continue to be the case. As Robert sees it, today, model quality and performance are the top considerations. Soon, we’ll reach the point where all the models are “good enough” to power most applications. The differentiation between open source and proprietary will then shift from model performance to other factors, like cost, latency, privacy, ease of use and customization, explainability, and so on. Many companies will navigate these tradeoffs by pursuing hybrid architectures.

Looking forward, open source will continue to improve through a combination of better base models, better tuning data, and human feedback. Proprietary LLMs will also advance in parallel. But more and more general use cases—one of our discussion leaders pegged it at 99% plus—will be covered by open source models, with proprietary models reserved for tail cases. - Small is the new big

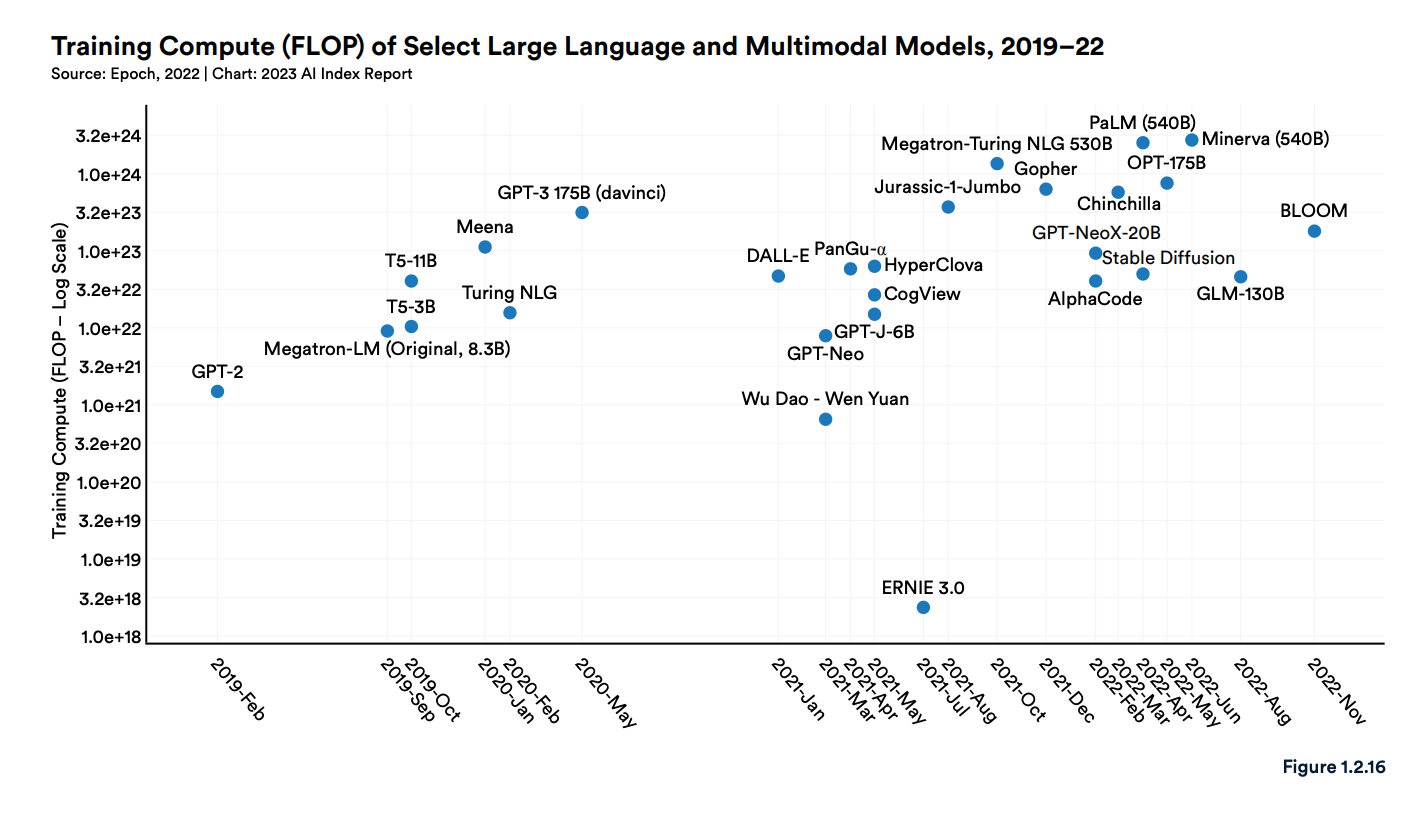

Bigger models and more data have long been the go-to ingredients for advancements in AI. Yet, as our second keynote speaker, Sean Lie, Founder and Chief Hardware Architect at Cerebras, relayed, we’re nearing a point of diminishing returns for simply supersizing models. Beyond a certain threshold, more parameters do not necessarily equate to better performance. Giant models waste valuable computational resources, causing costs for training and use to skyrocket. What’s more, we’re approaching the limits of what GPUs (even those carefully designed to train AI workloads) can support.Sam Altman echoed Sean’s stance in a talk at MIT last month: “I think we’re at the end of the era where it’s going to be these, like, giant, giant models. We’ll make them better in other ways.”

Enter small models. Here, efficiency is the new mantra, with better curated training data, next-generation training methods, and more aggressive forms of pruning, quantization, distillation, and low-rank adaptation all playing enabling roles. Together, these techniques have boosted model quality while shrinking model size. GPT-4 cost over $100 million to train; Databricks’ Dolly took $30 and an afternoon. Sean further enlightened us about Cerebras’s GPT-3 models. Optimized for compute using the Chinchilla formula, Cerebras’ models boast lower training times, costs, and energy consumption than any publicly available models to date.

These compact, compute-efficient models are proving that they can match, and even surpass, their larger counterparts on specific tasks. This could mark a new paradigm, where larger, general models serve as “routers” to downstream, smaller models that are fine-tuned for specific use cases. Instead of relying on one mammoth model to compress the world’s knowledge, imagine a coordinated network of smaller models, each excelling at a particular task. Here, the larger model functions as a reasoning and decision-making tool rather than a database, directing tasks to the most suitable AI or non-AI system. Picture the large, general model as your primary care doctor, who refers you to a specialist when needed.

This trend could be game changing for startups. Maintaining models’ quality while trimming their size promises to further democratize AI, leading to a more balanced distribution of AI power and value capture. Beyond better economics and speed, these leaner models offer the security of on-premise and even on-device operation. Imagine a future where your personal AI and the data that fuels live on your computer, ensuring that your “secrets” remain safe. My advice: watch this space closely for developments in the coming months.

Announcing the Foundation Capital Generative AI 25!

Today, we launched an open call for the “Foundation Capital Generative AI 25”: our ranking of the 25 most influential, venture-backed, for-profit companies that are defining the generative AI field.

To curate the list, we’re assembling a panel of experts from across academia and industry, including leading computer science professors, researchers from top foundation-model providers, and Fortune 500 CXOs. We’ll be considering a range of factors, including revenues, growth, culture, and influence. We’ll also be pairing submissions with third-party data to ensure that the ranking is both impartial and comprehensive.

Full Speed Ahead on AI with Matei Zaharia

My guest this month is AI luminary Matei Zaharia. Matei is the CTO and cofounder of Databricks and a professor of computer science at Stanford University. Our conversation covers a lot of ground—most of it technical, all of it very relevant for anyone who’s serious about building with AI.

We start with a discussion of Databricks’ early days: how this sprightly startup carved out its niche in a market dominated by incumbents. From there, we dive headlong into AI. Matei breaks down the most common challenges that enterprises encounter when attempting to adopt AI. He shares tips for how startups can best deploy foundation models. Plus, he ponders what the most transformative new use cases for AI will be over the next two years. Matei also gives us a behind-the-scenes glimpse of the cutting-edge ML research that he’s working on with his team at Stanford. Be sure to listen until the end for a fascinating exchange on the future of AI beyond LLMs! Listen in here.

Lessons for Generative AI Builders at AGI House

Continuing on my new favorite topic, we recently hosted a fireside chat at AGI House about the new world of generative AI applications. The panel starred our very own Joanne Chen alongside Vinayak Ramesh (cofounder and CEO of Ikigai), George Netscher (CEO of SafelyYou), and Professor Trevor Darrell (founding co-director at the Berkeley Artificial Intelligence Research, or BAIR, Lab). Before a standing-room crowd, they demystified the buzzwords—“data as a moat,” “feedback loops,” “GTM strategies/distribution,” “fine-tuning,” “domain expertise,” and so on—by exploring what each concept means, concretely, for early-stage builders. Key discussion questions included:

- How do you go from 0 to 1?

- How can generative AI startups compete with incumbents that are rapidly integrating this technology?

- How can startups build moats at the generative AI application layer? Do moats even matter?

After the panel, there were ample drinks and even more ample AI shop talk. Our heartfelt thanks to AGI house for making this event possible!

PMF >>> Hype

My friend and fellow investor, Ramy Adeeb, General Partner at 1984 Ventures, recently weighed in on the implications of the AI hype cycle for early-stage venture on his Substack, “Ramy’s Newsletter.” I highly recommend the full article! Here’s the teaser:

While hype helps spur innovation by attracting capital, talent, and customer awareness, it quickly fizzles when reality fails to match expectations. Many of the most successful startups of the past two decades (Google, Facebook, Uber, Airbnb, and Figma) began by tackling opportunities (search, social, the sharing economy, and WebSockets) that were unpopular at the time. These less-obvious companies emerged and thrived because they met a clear and urgent market need. When it comes to generating substantial returns, product-market-fit beats hype every time.

Sign up for Ramy’s Newsletter here.

Startup Spotlight: Tingono

Looking to improve cross-sells, upsells, and renewals while fending off downgrades and churn? Look no further than Tingono! This startup is on a mission to supercharge customer success teams’ net revenue retention using the power of generative AI. To start, Tingono gathers all your customer data into a single, user-friendly interface. Get a real-time snapshot of how customers are interacting with your product, keep tabs on the volume of support requests, track spending patterns, and much more! Then watch as Tingono transforms this wealth of customer information into actionable insights using generative AI. The results allow customer success teams to proactively capture expansion opportunities and prevent churn risks before they arise. Book a demo or try it out for yourself!

Published on 06.10.2023

Written by Ashu Garg