The State of Enterprise AI Adoption

I explore two major AI reports and weigh in on a leaked Google memo. I offer three takeaways from this year’s RSA conference. I share my conversation with Microsoft’s Chief Strategy Officer.

05.09.2023 | By: Ashu Garg

First off, big news! B2BaCEO has relaunched on Substack. If you’re receiving this newsletter, you’re either an existing subscriber or someone who has had a direct connection with me or Foundation Capital in the past.

From here on out, B2BaCEO will be delivered to you every month via Substack. This move positions B2BaCEO for the future and arms it with new tools, like recommendations, discussion threads, and comments. Stay tuned for a steady beat of new content, and thanks for reading!

Here’s What I’m Thinking

As we venture into May, generative AI continues to improve at breakneck speed. In the past 45 days alone, we’ve witnessed the emergence of GPT-4, Auto-GPT, Midjourney v5, Runway Gen-2, a viral AI Drake song, and the wiring of ChatGPT into robots. These advances are fueling one of the most rapid technology adoption cycles we’ve ever seen. Big tech players like Microsoft and Google have integrated generative AI into their core offerings, while popular B2B SaaS providers, such as Atlassian, HubSpot, Notion, Salesforce, and Zoom, are following suit. Nearly every startup product manager is adding generative AI-driven products and features to their roadmaps.

To help sort signal from noise, I turned to two reports: the annual AI Index, published by the Stanford Institute for Human-Centered Artificial Intelligence, and the 2023 AI Readiness Report from Scale, which draws on the results of a survey of 1,600+ executives and ML practitioners. Here are my top three takeaways from the reports, followed by my own two cents, which were informed by a leaked Google memo:

- AI adoption spans a wide range of use cases and industries.

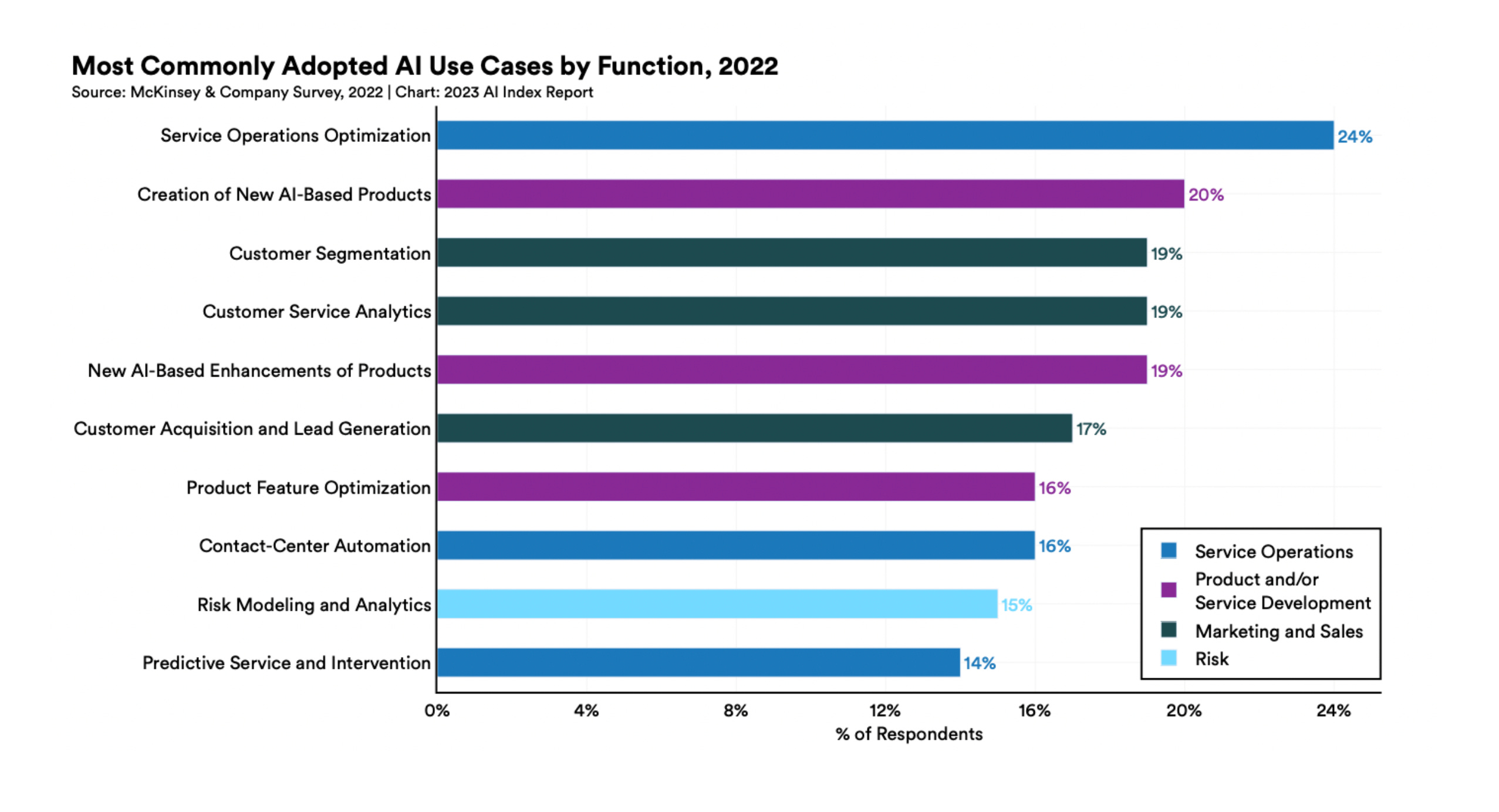

Since 2018, enterprises have doubled their average number of AI-powered use cases. In 2022, the top AI use case was service operations optimization, followed by the creation of new AI-based products, customer segmentation, customer service analytics, and AI-based product enhancements.

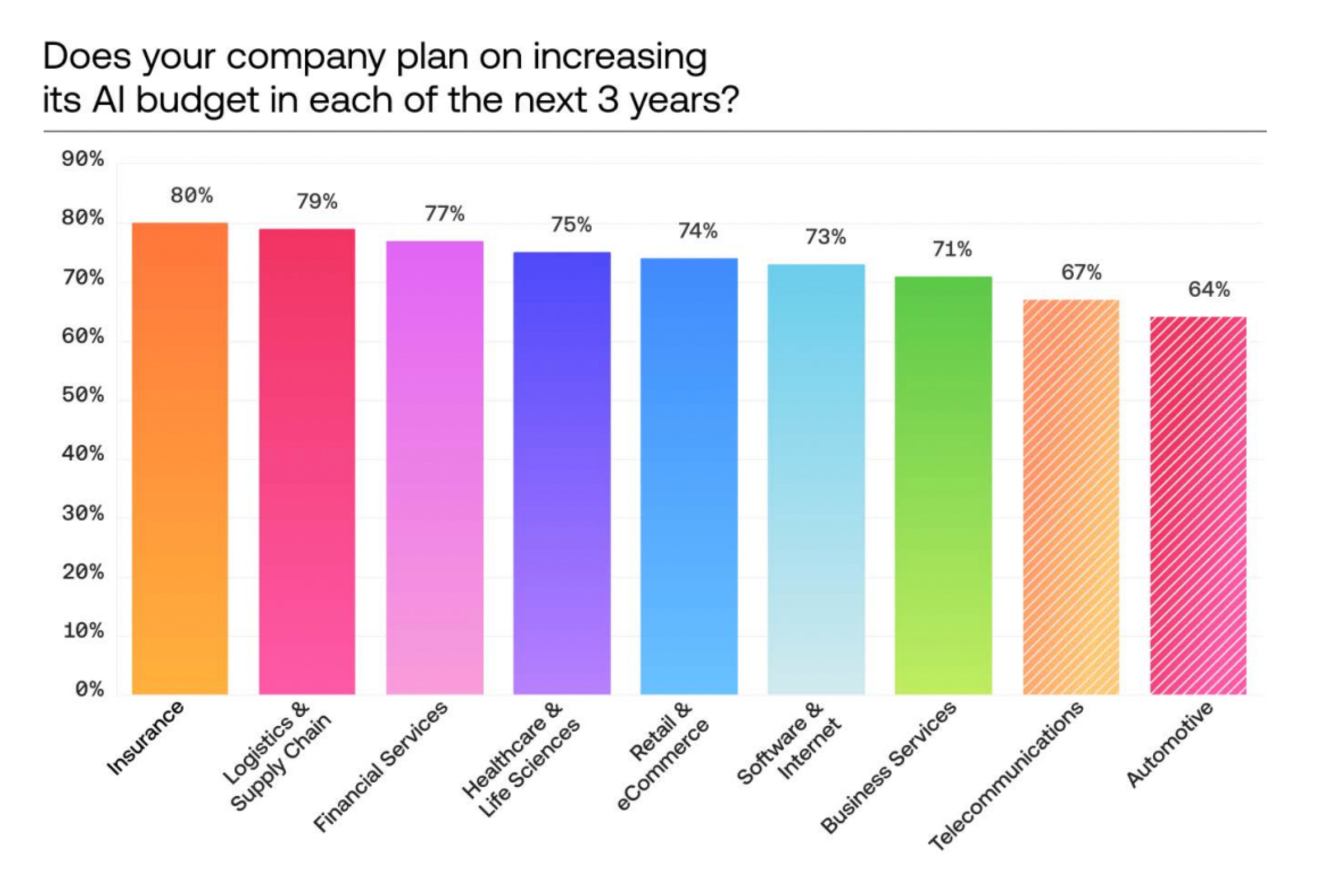

From an industry perspective, insurance, logistics and supply chain, and financial services demonstrate the highest enthusiasm for AI adoption. Top use cases within these industries include claims processing, fraud detection, and risk assessment/underwriting (insurance); inventory management, demand forecasting/planning, route optimization, and autonomous vehicles (logistics and supply chain); and investment research, fraud detection/AML, and customer-facing process automation (financial services).

- Generative AI has piqued enterprise interest, but adoption remains largely experimental.

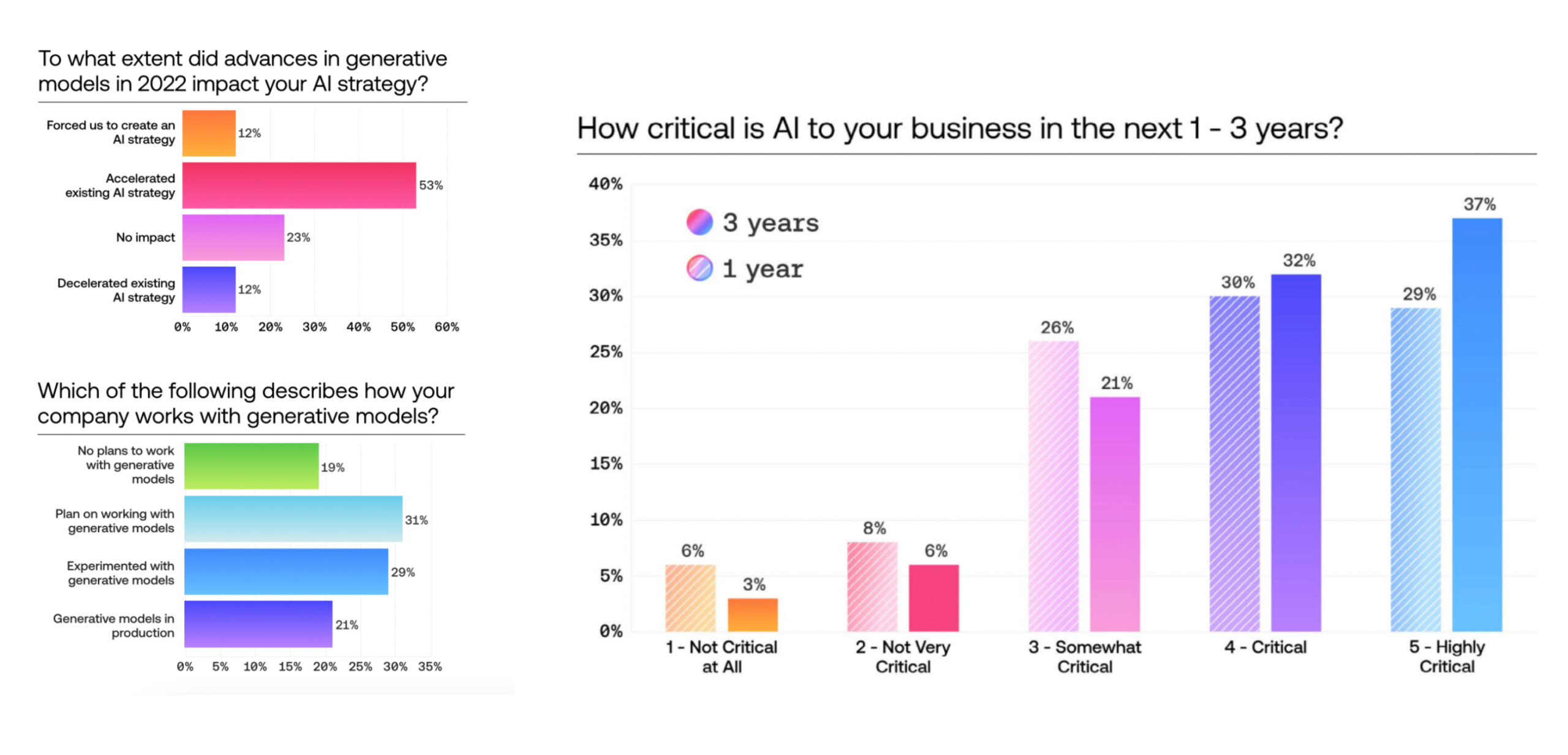

Generative AI has captured executives’ attention, with 65% creating new AI plans or accelerating their existing AI strategy as a result. Yet, while a vast majority (81%) are either actively working with generative models or plan to do so soon, the use of generative models in production remains limited. Still, generative AI’s impressive and ever-improving capabilities have convinced executives that AI is essential for their businesses’ future success. Looking one year out, 59% consider AI critical or highly critical, a number that rises to 69% over a three-year time horizon.

- Open-source models are emerging quickly in the generative AI ecosystem and will likely offer comparable performance for enterprise use cases in the near future.

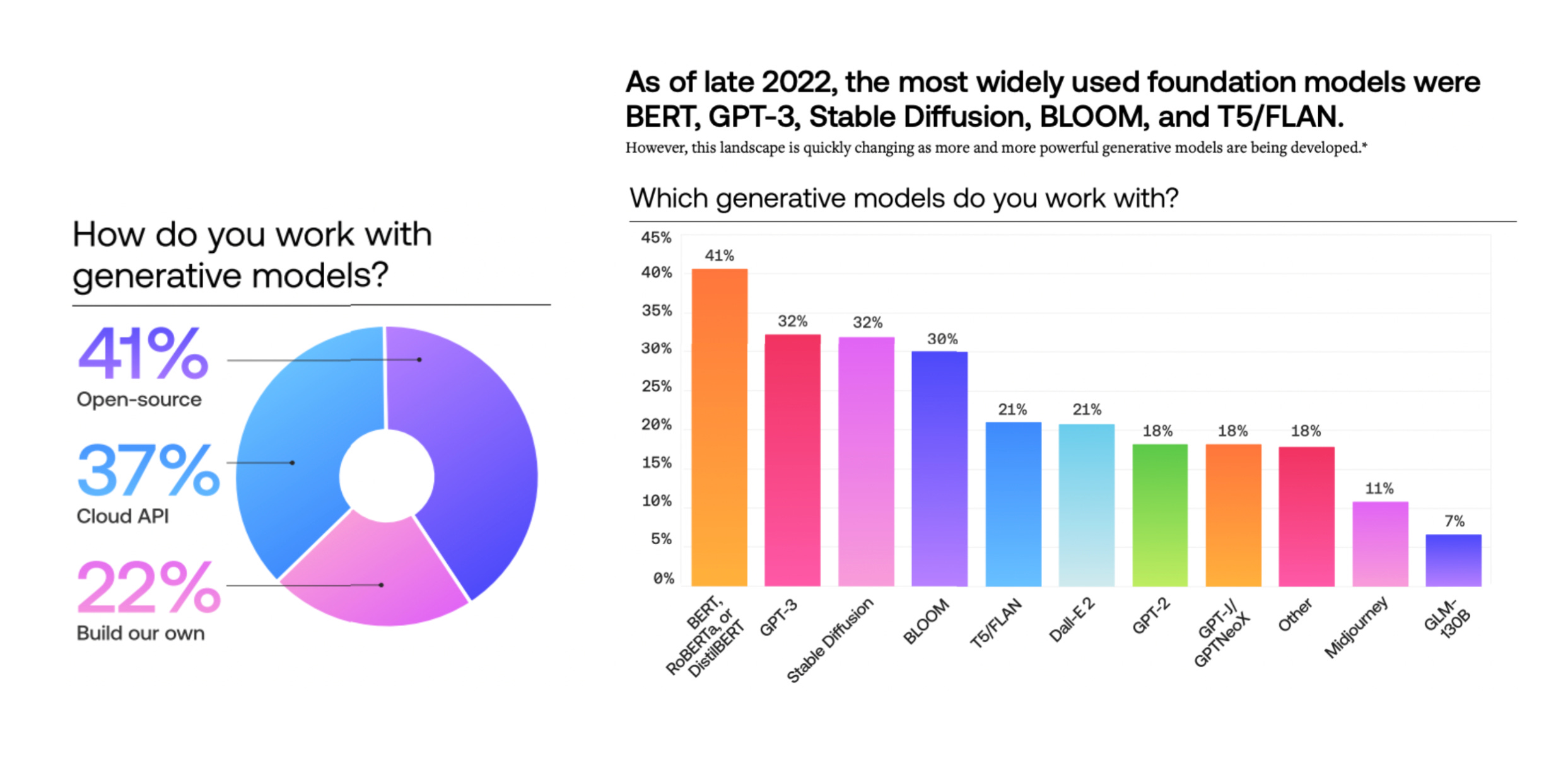

According to Scale’s survey, demand for open-source generative models is strong: 41% of enterprise respondents are using them. Cloud API generative models are a close second, with 37% of respondents relying on them. Of the top five most widely adopted foundation models in 2022, four (BERT, Stable Diffusion, BLOOM, and T5/FLAN) are open source.

My Two Cents

Given all the buzz, it’s no surprise that enterprise enthusiasm for AI is growing. Generative AI, specifically, will likely have the biggest impact on the way that enterprises develop natural language processing (NLP) systems. Indeed, for businesses that already rely on NLP, integrating large language models (LLMs) is a natural next step. Industries with text-centric use cases, including document processing, sentiment analysis, and chatbots, will be among LLMs’ earliest adopters. On the other hand, in cases where products do not inherently involve language or NLP, we’ll see more experimentation, as companies explore how LLMs can improve user experiences and layer on language as a more intuitive interface to their products.

This raises a follow-on question: What problems are enterprises seeking to solve with AI today, and how have the gains in generative AI expanded the scope of these problems? As LLMs reset the bar for best-in-class experiences across a range of tasks, they also introduce a tangle of challenges around integration with existing systems, regulatory compliance, explainability, data privacy, and security. Rather than build LLM systems internally, many enterprises may choose to adopt them through vendors: a trend that, if realized, promises to create significant opportunities for startups.

While the toast of the town is currently OpenAI’s GPT-4, I expect open-source models to play an increasingly important role in the AI ecosystem, and perhaps even dominate. In recent months, we’ve seen the performance gap between proprietary models and open-source alternatives rapidly narrow. Consider Cerebras-GPT, which significantly outperforms existing GPT-3 clones, and Databricks’ Dolly 2.0, built on EleutherAI’s open-source Pythia model family, which exhibits ChatGPT-like behavior in instruction-following tasks.

Initially, the release of ChatGPT was compared to both the Netscape and iPhone moments. A leaked internal document, written by a researcher at Google, hints that OpenAI may be more likely to fade like Netscape than enjoy the iPhone’s longevity. Titled “We Have No Moat… And neither does OpenAI,” the memo argues that the tight control exerted by Google and OpenAI over their models is becoming less and less effective. Open-source models are proving to be faster, more customizable, more private, and markedly less expensive than their proprietary counterparts. “In the end,” the author concludes, “OpenAI doesn’t matter.” Ouch.

Ultimately, the debate between open and closed models may result in a “both-and” rather than an “either-or” scenario. Combining multiple models has long been a standard M.O. among ML practitioners. Similarly, enterprises are likely to adopt a mix of proprietary and open-source foundation models as they optimize for variables like accuracy, latency, cost, and control over proprietary data.

Learnings From RSA

This year, RSA, the world’s leading cybersecurity conference, was back with a bang. More than 40,000 people attended, an increase of over 50% from last year. To mark the occasion, we hosted a bustling happy hour at our San Francisco office. The event featured drinks and networking, along with a lively panel discussion on the evolving CISO technology stack. Panelists included Hanan Szwarcbord, Chief Security Officer at Micron Technology, and David Tsao, CISO at Instacart, with expert moderation by our very own Sid Trivedi. As RSA wound down, I caught up with a handful of Foundation’s CEOs to get their takeaways. Here’s what they relayed:

- Cybersecurity embraces the AI hype: Keynotes were brimming with updates on generative AI integrations from big-name companies like Microsoft, Google, Cisco, and IBM. Numerous startups are also addressing challenges in this domain, with lots of excitement around AI copilots for cybersecurity. An emerging opportunity appears to be protecting ML models against cyberattacks.

- Point solution fatigue: As cloud security products and acronyms continue to proliferate, customers are beginning to express fatigue. In a shift from the best-of-breed approach historically favored by the cybersecurity community, major vendors like Palo Alto Networks and Microsoft were pitching their offerings as single platform plays. In light of these trends, it’s critical for startups to differentiate by developing easy-to-deploy POCs that demonstrate clear value in weeks rather than months.

- Cybersecurity as an enabler: As layoffs mount, cybersecurity leaders are recognizing the need to boost efficiency across business units while still mitigating risk. This means integrating cybersecurity into employees’ daily workflows, such as securing code during the development process for software engineers and providing cyber-awareness training for sales professionals.

Forbes AI 50

More big news! Three companies from my portfolio (Arize, Eightfold, and Databricks), along with Jasper, an investment led by my partner Joanne Chen, have been featured on the distinguished Forbes AI 50 list. Congratulations to our dedicated founders, and to Joanne for leading Foundation’s investment in Jasper!

Talking AI with Microsoft’s Chief Strategy Officer

Speaking of enterprise AI, I recently sat down with Bobby Yerramilli-Rao, an accomplished entrepreneur and investor, who now serves as Microsoft’s Chief Strategy Officer. We explored Bobby’s perspective on the sectors and problem spaces that are primed for AI-driven innovation, along with the opportunities available for startups. We then dove into the four layers of the AI stack: applications, platforms, infrastructure, and models. Lastly, we surveyed the many flavors of AI beyond LLMs. I came away with a more expansive understanding of our current AI moment and the immense promise it holds for both enterprises and society. Tune in.

Portco Spotlight: Arize

To top off this month’s flurry of AI updates, I’d like to spotlight Foundation’s portfolio company (and Forbes AI 50 member!) Arize. Arize’s automated model monitoring and observability platform helps ML teams deliver and maintain high-performing AI in production. With Arize, ML teams can quickly identify emerging issues, troubleshoot why they happened, and improve overall model performance across both structured and unstructured data.

Last week, Arize hosted its two-day Observe 2023 summit, the largest ML conference of the year, with 70+ speakers and 5,000+ registrants. This year’s theme: ML observability in the age of generative AI. To mark the event, the Arize team unveiled Phoenix: the first open-source observability library specifically designed to evaluate outputs from LLMs and other generative AI models. With Phoenix, data scientists can visualize intricate LLM decision-making processes, monitor LLMs for false or misleading results, and pinpoint solutions to optimize outcomes. Check it out for yourself here!
