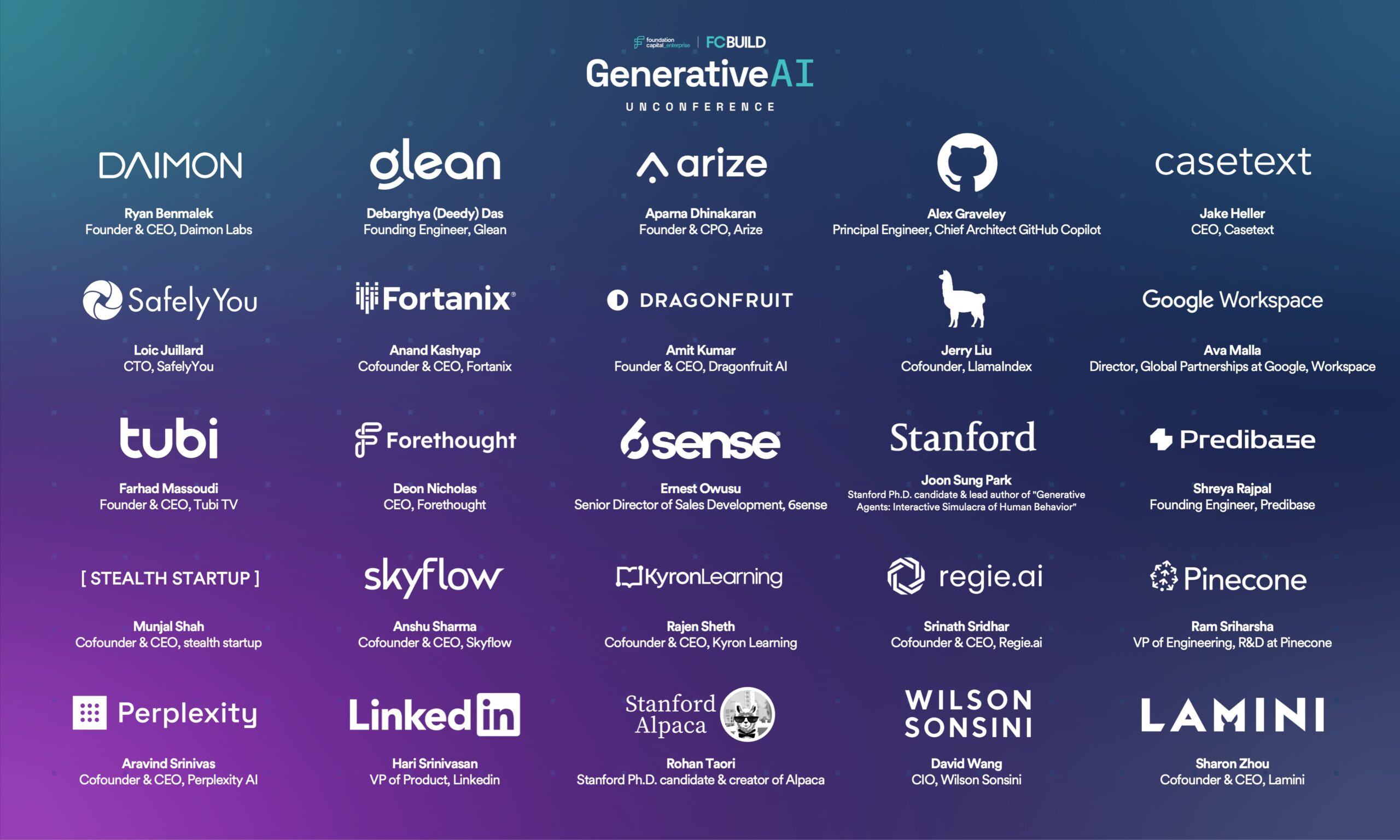

In early May, we hosted our inaugural, invite-only Generative AI “Unconference” in San Francisco. The event convened over eighty of the top (human!) minds in AI, including founders, researchers, and industry experts, for an energizing afternoon of group discussions about building successful AI companies. Seizing on this momentum, we launched an open call for the “Foundation Capital Generative AI 25”: our ranking of the 25 most influential, venture-backed, for-profit companies that are defining the generative AI field, curated by a panel of independent experts.

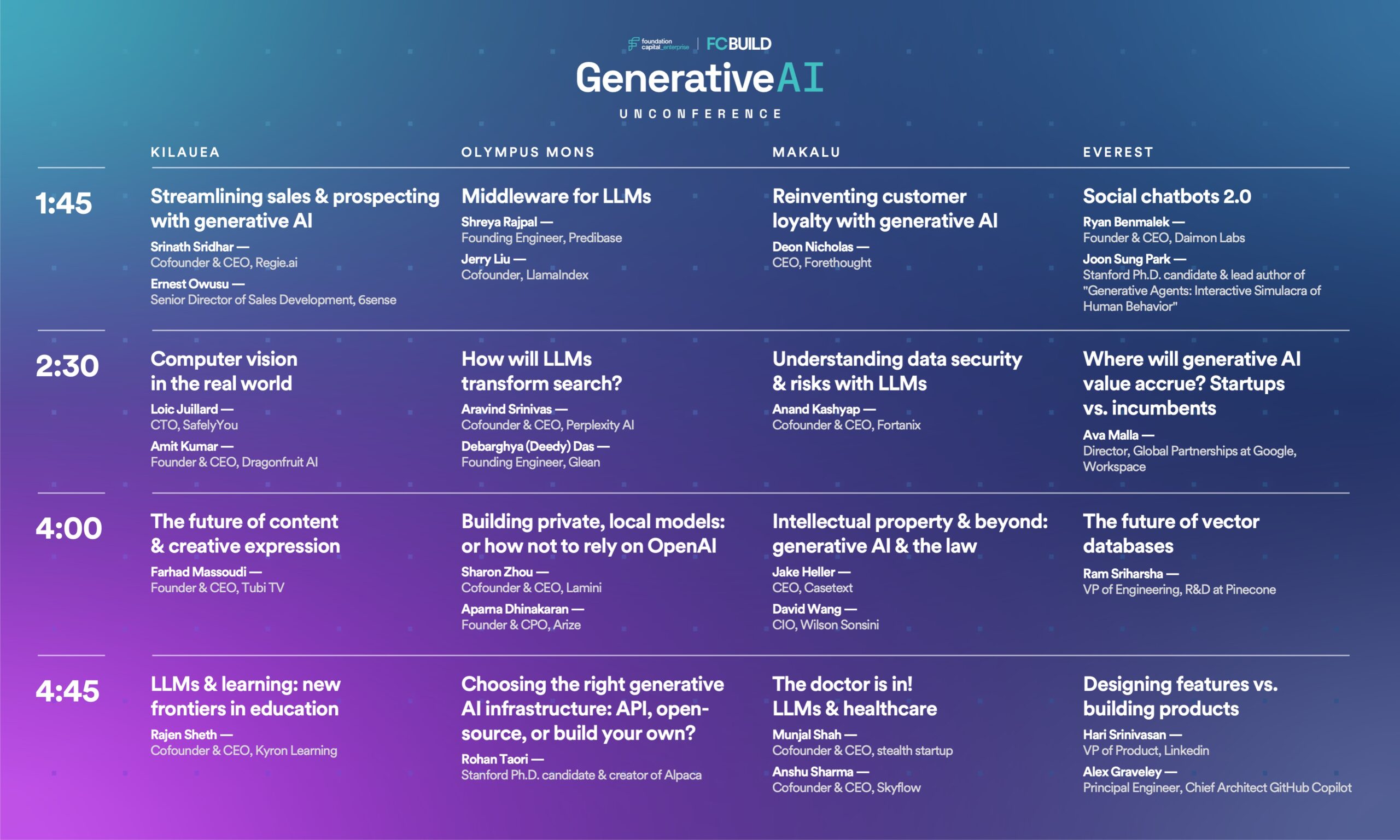

Today, we’re excited to share the full schedule for the day, along with our top seven takeaways. While we can’t divulge every detail (all sessions were intentionally off the record), we hope that this post offers a wealth of crowdsourced wisdom—and perhaps even inspires you to apply to the “Generative AI 25”!

Our run of show for the day

Without further ado…

Here’s What We Learned:

In AI, as in other technology waves, every aspiring founder (and investor!) wants to know: Will incumbents acquire innovation before startups can acquire distribution? In “Where will generative AI value accrue? Startups vs. incumbents,” we explored this topic with the director of global partnerships at Google. We also pooled perspectives from generative AI builders across a broad range of fields, including customer success (“Reinventing customer loyalty with generative AI”), education (“LLMs & learning: new frontiers in education”), entertainment (“The future of content & creative expression”), healthcare (“Computer vision in the real world” and “The doctor is in! LLMs & healthcare”), law (“Intellectual property & beyond: generative AI & the law”), and sales (“Streamlining sales & prospecting with generative AI”),

Our takeaway: While incumbents benefit from scale, distribution, and data, startups can counter with business model innovation, agility, and speed—which, with the present, supersonic pace of product evolution, may prove more strategic than ever. To win, startups will have to lean into their strength of quickly experimenting and shipping. Other strategies for startups include focusing on a specific vertical, building network effects, and bootstrapping data moats, which can deepen over time through product usage.

The key distinction here lies between being an “AI integrator” (the incumbents’ route) and being an “AI native” (unique to startups). Incumbents can simply “add AI” to existing product suites, which places startups at a distribution disadvantage. By contrast, “AI natives” can design products from the ground up with AI at their core. Architected around net-new capabilities that make them the proverbial “10x better,” AI-native versions of legacy products can capture meaningful market share from (or even unseat!) incumbents, who are burdened by product debt. The challenge for startups is determining where these de novo, AI-native product opportunities lie. Because startups thrive on action and speed, the best way for founders to figure this out might simply be to jump in and try!

Another hot-button question at the Unconference: How can builders add value around foundation models? Are domain-specific data and customizations the solution? Does value accrue through the product experience and serving logic built around the model? Are there other insertion points that founders should explore?

We sought answers in “Designing features vs. building products,” co-led by the principal engineer of GitHub Copilot and a VP of Product at LinkedIn. We then dove into the technical nitty gritty in “Choosing the right generative AI infrastructure: API, open-source, or build your own?” and “The future of vector databases,” with the creator of Alpaca and the VP of engineering at Pinecone.

Here’s the gist: While foundation models will likely commoditize in the future, for now, model choice matters. From there, an AI product’s value depends on the architecture that developers build around that model. This includes technical decisions like prompts (including how their outputs are chained to both each other and external systems and tools), embeddings and their storage and retrieval mechanisms, context window management, and intuitive UX design that guides users in their product journeys.

As our session leaders emphasized, it’s important to remember that generative AI is a tool, not a cure-all. The time-tested rules of product development still hold true. This means that every startup must begin in the old-fashioned way: by identifying a pressing problem among a target user base. Once a founder fully understands this problem (ideally, by talking to many of these target users!), the next step is to determine if and why generative AI is the best tool to tackle it. As in previous waves of product innovation, the best AI-native products will solve this problem from beginning to end.

Generative AI has repeatedly been likened to a “gold rush,” with many words spilled about the “picks and shovels” needed to enable it. Yet, as we dove into “Middleware for LLMs” with founders of Predibase and LlamaIndex, we found that this analogy doesn’t quite fit with generative AI. The main reason? The pace at which the underlying models are evolving, which makes it difficult to distinguish point-in-time problems from enduring elements of a new “foundation model Ops” stack.

One potential solution that surfaced: Instead of conceiving infrastructure and applications as discrete, linear stages—with infrastructure coming first, followed by applications—it may be more productive to view them as intertwined, co-evolving phenomena. Previous platform shifts, from the advent of electricity to the rise of the internet, reveal that innovation cycles often begin with the development of applications (the lightbulb, email), which motivate the development of supporting tools (the electric grid, Ethernet). These tools, in turn, pave the way for more applications, which drive the creation of further tools, and so on. Rather than design “picks and shovels” for a hypothetical future, infrastructure builders should tap into this virtuous cycle and keep as close as possible to their peers at the application layer.

Which brings us to our next learning: Generative AI is not just a technology, it’s also a new way of engaging with technology. LLMs enable forms of human-computer-AI interaction that were previously unimaginable. By allowing us to express ourselves to computers in fluent, natural language, they make our current idiom of clicking, swiping, and scrolling seem antiquated in comparison.

This new UI paradigm creates outsized opportunities for startups (the “AI natives”) while posing a potentially existential threat to incumbents (the “AI integrators”). Consider ChatGPT, still far and away the fastest-growing startup in history. In essence, it’s two things: an LLM (GPT-3.5, followed by GPT-4) with an intuitive interface on top. Aligning GPT-3 to follow human instructions through supervised learning and human feedback was an important first step. Yet, alongside OpenAI’s technical breakthroughs, it was an interface innovation that made generative AI accessible and useful to everyday users.

Our discussion with Perplexity’s cofounder and CEO, Aravind Srinivas, “How will LLMs transform search?”, unpacked these developments. As the group affirmed, digital interfaces are our portals to the internet—and, consequently, to the world. For years, Google’s search box has mediated (and, in some sense, determined) our experience of the web. The consensus in the room held that generative AI will replace the traditional search box with a more natural, conversational interface. As Aravind puts it, a shift is underway from “search engines” to “answer engines.”

Today, our interaction with Google also resembles a conversation, albeit a very clunky one. We ask Google a question, and it responds with a series of blue links. We click on a link, which sends another message to Google, as does the time we spend on the page, our behavior on that page, and so on. LLMs can transform this awkward conversation into a fluent, natural-language dialogue. This idiom—not “Google-ese”—promises to become the default interface not simply for search, but for all our interactions with computers.

The shift gives many reasons for optimism. As the session highlighted, answer engines powered by LLMs could give rise to a more aligned internet that’s architected around users, not advertisers. In this new paradigm, users pay for enhanced productivity and knowledge, rather than having their attention auctioned to the highest bidder. As conversational interfaces gain traction, existing modes of internet distribution could be upended, dealing a further blow to incumbents. Who needs to visit Amazon.com to order paper towels when you can simply ask your AI?

As we learned from the founder of Daimon Labs and a leading Ph.D. researcher of generative agents in “Social chatbots 2.0,” the chat box is just the beginning of where AI-first interfaces will go. To paraphrase Dieter Rams, effective design clarifies a product’s structure; at its best, it’s self-explanatory, like a glove that slides seamlessly onto your hand. Today, many generative AI applications anchor on an open-ended interface that’s akin to a “blank page,” with no indication of how it can be best used. The only clue that I, the user, receive is a blinking cursor that encourages me to input text into the box.

This lack of signposts leads to a flood of follow-on questions: What does this sibylline AI know? How should I phrase my question? Once I receive an answer, how do I determine if the AI understood me and assess its response? Faced with a blank text box, the onus falls on me to express my intent, think of all the relevant context, and evaluate the AI’s answer. Then comes the problem of “unknown unknowns”: the fact that I lack visibility into the full possibility space of the model.

In the session, we explored how to create interfaces that allow users to intentionally navigate this space, rather than aimlessly bumble through it. We broached forms of expression beyond text, such as images, video, and audio. We also tackled creating workflows that collect proprietary data—and keep users engaged enough to willingly contribute it. The challenge: Design a generative AI interface that motivates users to provide feedback, without making them feel like they’re being mined for data.

We left the discussion eagerly expecting much more innovation on this front, including chatbots that are voice first, equipped with tools, and capable of asking clarifying questions, as well as generative agents that can simulate human social behavior with striking precision. The consensus in the room? We’re just one to two UX improvements away from a world where AI mediates all human communication. This interface revolution puts immense value, previously controlled by big tech, up for grabs.

Spring 2023 may be remembered as AI’s “Android moment.” Meta AI’s LLaMA, a family of research-use models whose performance rivals that of GPT-3, was the opening salvo. Then came Stanford’s Alpaca, an affordable, instruction-tuned version of LLaMA. This spawned a flock of herbivorous offspring, including Vicuna, Dolly (courtesy of Databricks), and Koala, along with an entire family of open-source GPT-3 models from Cerebras. Currently, Vicuna ranks fifth on LMSYS Org’s performance leaderboard behind proprietary offerings from OpenAI and Anthropic.

So far, much of the progress in deep learning has been in open source. Many of our Unconference participants—including one of our keynote speakers, Robert Nishihara, cofounder and CEO of Anyscale—are betting that this will continue to be the case. As Robert sees it, today, model quality and performance are the top considerations. Soon, we’ll reach the point where all the models are “good enough” to power most applications. The differentiation between open source and proprietary will then shift from model performance to other factors, like cost, latency, ease of use and customization, explainability, privacy, security, and so on.

Two sessions focused on the last two points: “Building private, local models: or how not to rely on OpenAI” and “Understanding data security & risks with LLMs.” As the cofounders of Lamini, Arize, and Fortanix explained, many companies are reluctant to feed their intellectual property to third-party model providers. Their fear is that, if this data is used in training, it could be baked into future versions of the model, thus creating openings for both unintentional leaks and malicious extraction of mission-critical information. These concerns are lending further tailwinds to open source, with many businesses considering running bespoke generative models within their own virtual private clouds.

Looking forward, open source will continue to improve through a combination of better base models, better tuning data, and human feedback. Proprietary LLMs will also advance in parallel. Many companies will pursue hybrid architectures that combine the best of both options. But more and more general use cases—one of our session leaders pegged it at 99% plus—will be covered by open-source models, with proprietary models reserved for tail cases.

Bigger models and more data have long been the go-to ingredients for advancements in AI. Yet, as our second keynote speaker, Sean Lie, founder and chief hardware architect at Cerebras, relayed, we’re nearing a point of diminishing returns for supersizing models. Beyond a certain threshold, more parameters do not necessarily equate to better performance. Giant models waste valuable computational resources, causing costs for training and use to skyrocket. What’s more, we’re approaching the limits of what GPUs (even those carefully designed to train AI workloads) can support. Sam Altman echoed Sean’s stance in a talk at MIT last month: “I think we’re at the end of the era where it’s going to be these, like, giant, giant models. We’ll make them better in other ways.”

Enter small models. Here, efficiency is the guiding mantra, with better curated training data, next-generation training methods, and more aggressive forms of pruning, quantization, distillation, and low-rank adaptation all playing enabling roles. Together, these techniques have boosted model quality while shrinking model size. GPT-4 cost over $100 million to train; Databricks’ Dolly took $30 and an afternoon. Sean gave us the inside scoop on Cerebras’s GPT-3 models. Optimized for compute using the Chinchilla formula, Cerebras’s models boast lower training times, costs, and energy consumption than any of their publicly available peers.

These compact, compute-efficient models are proving that they can match, and even surpass, their larger counterparts on specific tasks. This could usher in a new paradigm, where larger, general models serve as “routers” to downstream, smaller models that are fine-tuned for specific use cases. Instead of relying on one mammoth model to compress the world’s knowledge, imagine a coordinated network of smaller models, each excelling in a particular domain. Here, the larger model functions as a reasoning and decision-making tool rather than a database, delegating tasks to the most suitable AI or non-AI system. Picture the large, general model as your primary care doctor, who refers you to a specialist when needed.

This trend could be game changing for startups. Maintaining models’ quality while trimming their size promises to further democratize AI, leading to a more balanced distribution of AI power and value capture. Beyond better economics and speed, these leaner models offer the security of on-premise and even on-device operation. Imagine a future where your personal AI and the data that powers it live on your computer, ensuring that your “secrets” remain safe. Our advice: Watch this space closely for developments in the coming months.

Published on 06.16.2023

Written by Joanne Chen and Jenny Kaehms