Decoding AGI

I explain why we need to understand this technology before we rush to regulate it. I share insights from my conversation with AI pioneer Naveen Rao on the path to achieving it.

12.22.2023 | By: Ashu Garg

As we look toward 2024, I want to weigh in on a topic that’s been getting lots of attention lately: artificial general intelligence, or AGI. Until recently, discussions of AGI were considered fringe: the stuff of science fiction, rabbit-hole Reddit forums, esoteric academic debates. Then came ChatGPT. This was the moment when “AGI stopped being a dirty word,” as Ilya Sutskever, co-founder and Chief Scientist at OpenAI, put it.

AGI enthusiasts foresee a future where this technology solves our most intractable challenges, revolutionizing healthcare, eradicating diseases, democratizing education, boosting the economy, and reversing global warming. Sam Altman sees AGI as a gateway to “the best world ever.” AGI doomers hold a markedly different view: they believe that AGI could spell the end of humanity.

Amid this mix of excitement and fear, we still lack a clear understanding of what AGI truly is. That’s because it’s a profoundly complex concept, intertwined with fundamental questions about how to define and measure “intelligence,” both in humans and in the machines we aim to create. This ambiguity, coupled with the hype, is triggering numerous—and, in my view, very hasty—efforts at regulation.

As I see it, AGI is several decades away, and the anxiety around it is greatly exaggerated. What’s clear to me is that our discussions about AGI need to be much sharper and more focused. In this month’s newsletter, I trace AGI’s history and present several definitions for the term. I close with insights from my recent conversation with Naveen Rao, co-founder of MosaicML and current head of generative AI at Databricks, about what this all means for entrepreneurs and the innovation economy.

Sounding the alarm

Last month’s tumult at OpenAI brought the stakes of the AGI debate down from theory and into the business world. OpenAI’s goal has long been clear: develop AGI that “benefits all of humanity.” Many reports (all unconfirmed) speculate that Altman’s ouster was due to a clash between two AI development philosophies. On one side, AI-safety advocates like Sutskever, who co-leads OpenAI’s “superalignment” team, promote a cautious, controlled approach. On the other, Altman and his framework of “iterative deployment” represent a push for more rapid innovation.

At the same time, rumors swirled about Q*, OpenAI’s new model that’s reportedly able to solve basic math problems—a major leap forward from GPT-4. This advance from simple prediction to multi-step, abstract reasoning could mark a significant step toward human-level cognitive skills. Some believe that Sutskever was involved in this breakthrough and, upon realizing its implications, decided to slam on the brakes.

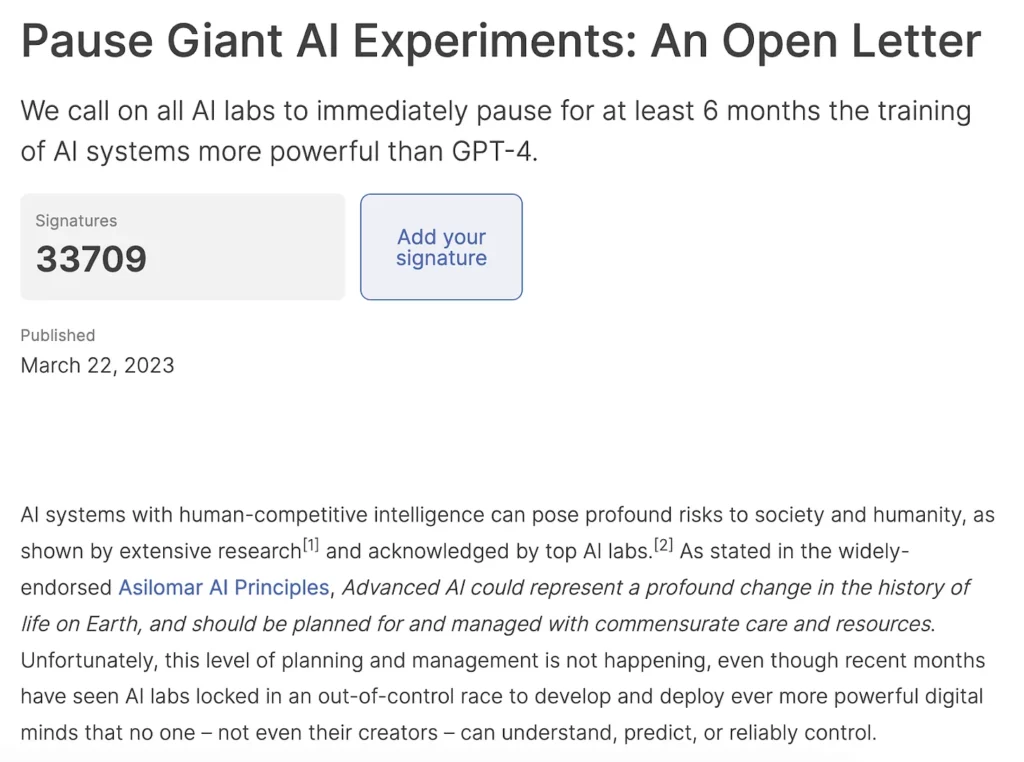

The post-ChatGPT alarm first started to sound in a major, public way in March. Following the release of GPT-4 on March 14, more than 1,000 industry leaders, including Yoshua Bengio, Elon Musk, and Yuval Noah Harari, signed an open letter urging a six-month pause in advanced AI development, citing “profound risks to society and humanity.”

What these risks are depends on your timeline. Short term, there’s worry about manipulated media and misinformation. Medium-term concerns include job displacement, the rise of AI friends and lovers, and strengthening of authoritarian regimes. Long term, technologists like Ian Hogarth fear a “God-like AI” that could make humans obsolete or extinct.

Alarmed by AI’s prospective perils, governments globally are acting to mitigate them. In October, the Biden administration issued a 20,000-word executive order on AI safety. Earlier this month, the EU agreed on sweeping AI legislation, which includes disclosure requirements for foundation models and strict restrictions on the use of AI in law enforcement.

Zooming out, the global policy response to AI remains highly uneven and hampered by governments’ limited AI expertise. U.S. lawmakers openly admit that they barely understand how AI works. This admission alone should give us pause about the efficacy of their regulatory efforts.

Worsening matters is the amorphous nature of AGI. Before we rush into regulation, we must first understand AGI clearly. To do so, let’s explore AGI’s history and survey several definitions for what it might entail.

What is AGI?

In my current understanding, AGI is an AI system that can reason independently and generalize that ability (including the capacity to “think” and make decisions autonomously) across a wide variety of domains.

I’ll caveat that defining AGI is like aiming at a moving target. This is due to the “AI effect”: once a groundbreaking AI technology becomes routine, it’s no longer viewed as “true AI.” As we edge closer to AGI, our expectations for it will expand, pushing the threshold for true AGI even further out.

For decades, mastering chess was seen as the litmus test for true machine intelligence. In 1997, Deep Blue beat world chess champion Garry Kasparov, and the bar got higher. Today, Waymos autonomously navigate the streets of San Francisco, making intricate decisions in real time. Yet this achievement too doesn’t seem to meet the ever-escalating criteria for AGI.

AGI was first proposed in 2007 by DeepMind co-founder Shane Legg as the title for fellow researcher Ben Goertzel’s book on future AI developments. Legg intended AGI as an ambitious and deliberately ambiguous term. His aim was to distinguish it from “narrow AI” systems, like IBM’s chess-playing Deep Blue, that excel at specific tasks. AGI, by contrast, denotes a versatile, all-purpose AI, capable of generalizing across a wide range of problems, contexts, and domains of knowledge.

Since then, AGI’s exact definition has remained an open question. To date, there’s no consensus about what constitutes AGI, nor are there standardized tests or benchmarks to measure it. The most common definition that I’ve found is an AI that matches or exceeds human capabilities across diverse tasks. OpenAI describes AGI as “AI systems that are generally smarter than humans.” Sutskever echoed this definition in his October TED talk:

“As researchers and engineers continue to work on AI, the day will come when the digital brains that live inside our computers will become as good and even better than our own biological brains. Computers will become smarter than us. We call such an AI an AGI, artificial general intelligence, when we can say that the level at which we can teach the AI to do anything that, for example, I can do or someone else.”

In both examples, the criteria for human-level intelligence, the scope of tasks included, and the depth of capabilities needed for AGI are not specified. The observer is left to presume that AGI is like AI, but much, much better.

AGI’s ambiguity is closely tied to the underlying fuzziness of “intelligence.” In a 2006 paper, Legg and computer scientist Marcus Hutter highlighted this by compiling over 70 different definitions of intelligence. These encompass the abilities to learn, plan, reason, strategize, solve puzzles, make judgments under uncertainty, and communicate in natural language. Other definitions extend to traits like imagination, creativity, and emotional intelligence, as well as the ability to sense, perceive, and take action in the world.

The broad scope of intelligence further muddies our grasp of what AGI is and how we’ll know when it’s here. Just two days before being fired from OpenAI, Altman dismissed the term as “ridiculous and meaningless” and apologized for popularizing it. “There’s not going to be some milestone that we all agree, like, OK, we’ve passed it and now it’s called AGI,” he argued. Later, he suggested that a system capable of making novel scientific discoveries would count as “the kind of AGI superintelligence that we should aspire to.” By this definition, what sets AGI apart is the ability to create new knowledge rather than merely reshuffle existing information.

Altman’s mention of “AGI superintelligence” adds still more nuance to the AGI debate. To achieve AGI, does a system need to equal human intelligence or surpass it? If the latter, by how much, and against what benchmark of human intelligence should this be measured?

Reasoning and emergence

Debates over the nature of intelligence often fall back to the notion of reasoning. Itself an expansive term, reasoning denotes the wide range of processes by which the mind “thinks,” such as drawing conclusions from a set of premises (deduction), making generalizations from specific observations (induction), and using logic to make sense of incomplete information (abduction).

In the quest for “AGI superintelligence,” reasoning is a key ingredient. Many experts believe that limited reasoning skills are the main barrier between today’s frontier models and tomorrow’s AGI. While not specifically trained for reasoning, foundation models display behaviors that resemble this capability. Yet, it remains unclear if these models are actually reasoning, based on a fundamental understanding of the concepts at hand, or if they’re simply using learned patterns to make predictions.

On this, the research community is split. Some view LLMs as sophisticated forms of autocomplete that lack real “thought.” A December 2022 study found that GPT-based LLMs perform better on math problems that involve numbers they’ve encountered during pretraining. This implies that LLMs rely more on pattern matching than abstract numerical reasoning. An April 2023 paper went one step further, claiming that the “emergent abilities” of LLMs are a “mirage”: an effect of the methods and metrics that researchers use to evaluate them.

Other studies present a diverging view. Earlier this year, the Othello-GPT experiment (based on the strategy game of the same name) signaled that AI models can produce “models of the world” that are akin to human “understanding.”

More impressive still, a recent paper in Nature by researchers at DeepMind reveals that LLMs can discover new knowledge. Their study introduces “FunSearch,” a method that pairs a pre-trained LLM with an automated evaluator that filters out incorrect responses. This approach led to previously unknown solutions to the cap set problem: a long-standing challenge in pure math that involves finding the largest possible set of a certain type. This problem is notoriously difficult, with more candidate solutions than there are atoms in the universe. DeepMind’s breakthrough marks a first for LLMs, demonstrating their potential for true innovation.
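To make the generate-and-filter idea concrete, here is a minimal toy sketch in Python. It is not DeepMind's implementation. A random scoring function stands in for the LLM's proposals, and every function name (`is_cap_set`, `propose_priority`, `build_greedy`, `search`) is hypothetical. It assumes the standard definition of a cap set: a set of vectors with entries in {0, 1, 2} in which no three distinct vectors sum to zero modulo 3.

```python
import itertools
import random


def is_cap_set(points):
    """Evaluator: True if no three distinct vectors sum to zero mod 3."""
    for a, b, c in itertools.combinations(points, 3):
        if all((x + y + z) % 3 == 0 for x, y, z in zip(a, b, c)):
            return False
    return True


def propose_priority(rng, n):
    """Stand-in for the LLM (an assumption): a random scoring function."""
    weights = [rng.uniform(-1, 1) for _ in range(3 * n)]

    def priority(v):
        return sum(weights[3 * i + x] for i, x in enumerate(v))

    return priority


def build_greedy(priority, n):
    """Add vectors in priority order, keeping each only if the set stays valid."""
    cap = []
    vectors = itertools.product(range(3), repeat=n)
    for v in sorted(vectors, key=priority, reverse=True):
        if is_cap_set(cap + [v]):
            cap.append(v)
    return cap


def search(n=3, rounds=50, seed=0):
    """Propose, evaluate, and keep only valid candidates that improve on the best."""
    rng = random.Random(seed)
    best = []
    for _ in range(rounds):
        candidate = build_greedy(propose_priority(rng, n), n)
        if is_cap_set(candidate) and len(candidate) > len(best):
            best = candidate
    return best


if __name__ == "__main__":
    result = search()
    print(len(result), result)
```

The point of the sketch is the division of labor: the proposer can be as unreliable as it likes, because nothing survives unless the evaluator confirms it. In this toy version the greedy builder never produces an invalid set, so the validity check in `search` is only there to mirror that filtering step.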

The risks of AI regulation

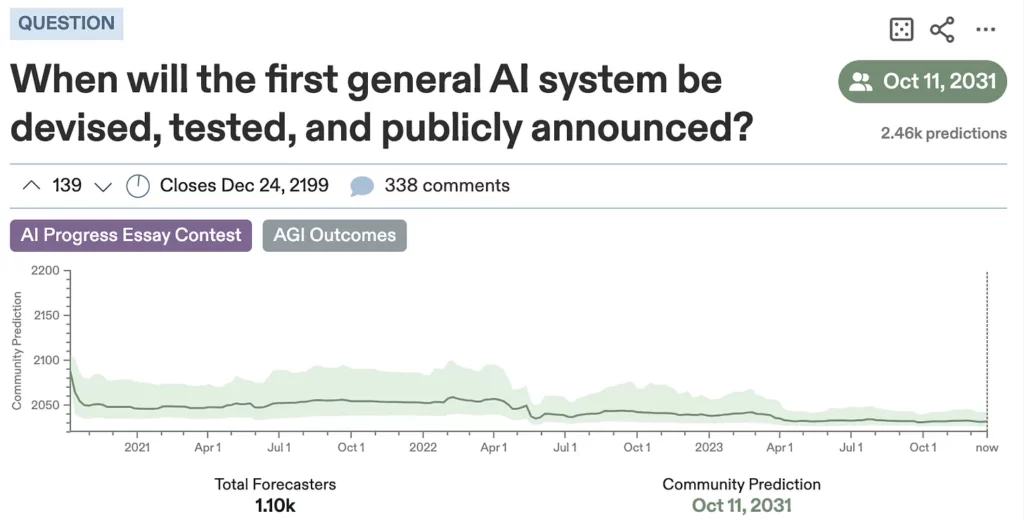

Public prediction markets peg the median date for the arrival of AGI at 2031. Yet, in my view, we’re still more than a decade away. Fears around AGI seem greatly inflated. I’ll concede that the risk of an AI apocalypse is non-zero. Yet it seems about as likely as discovering that your pet is secretly a mastermind plotting world domination.

At its core, AI marks a continuation of computers’ progressive ability to process and analyze data. The threats of misuse and adverse outcomes are real, but they’re not unique to AI. Every technology, from the discovery of fire to CRISPR, carries the potential for both immense benefits and far-reaching harms.

In my most recent podcast, I explore these topics with Naveen Rao. As the founder of two pioneering AI companies (Nervana, an AI-focused chip startup acquired by Intel in 2016, and MosaicML, a platform for creating and customizing generative AI models acquired this June by Databricks), Naveen has a better pulse on this debate than nearly anyone I know. He places the probability of achieving AGI in the next three years at “virtually zero.” “I could say maybe we’re at a 50% or 30% chance in 10 years. Maybe by 30 years, we’re in the 90% chance range,” he contends.

The real danger lies not in rogue AI, but in hastily constraining a technology that we don’t yet understand. Naveen’s take is clear: “You can’t make rules and laws before you understand the problem.” He compares regulating generative AI to setting traffic laws in 1850 without knowing what cars, accidents, or liability look like. Much like the internal combustion engine in its early days, generative AI’s future applications are largely unknown. Rushing to regulate AI risks stalling progress on these unforeseen yet immensely beneficial use cases.

Moreover, many of the people who most loudly warn of AGI’s risks have financial motives. To be sure, some are sincerely terrified about human extinction. Yet many others are self-interested opportunists who stand to profit from restrictive laws that shield them from startup and open-source competition.

Why we need more AI, faster and sooner

The key point is this: there’s no immediate need to regulate AI. What we’re seeing is regulation in search of a problem that doesn’t yet exist. Concerns that regulators frequently raise, like misinformation, algorithmic discrimination, and job displacement, are not unique to AGI: they’re tech-wide issues.

Today’s attempts at AI regulation are more knee-jerk than constructive. Regulators always have a vested interest in making regulations. Stung by their slow response to social media and crypto, many are now overly eager to rein in AI. Their efforts are fueled by widespread public pessimism about AI and broad distrust of the tech industry.

As an unwavering AI optimist, I believe that we need more AI in the hands of more people as soon as possible. Advancing AI is the only way to understand its capabilities and prepare for its impacts, both positive and negative. For me, the central question is whether more intelligence, both human and artificial, will make our world better. My stance is emphatically “yes.” In light of the urgent challenges that we face globally, the cost of stifling AI’s progress is simply too great. We must propel AI forward, not hold it back.
