Beyond RPA: How LLMs are ushering in a new era of intelligent process automation

03.21.2024 | By: Joanne Chen, Jaya Gupta

At its core, every business is a collection of processes. From lead generation and customer acquisition to financial planning and procurement, these processes are interconnected, with the outputs of one providing the inputs for another. As a business grows, so does its number of processes, creating an intricate network of data flows and dependencies.

To help manage this complexity, businesses began adopting an early form of intelligent process automation—Robotic Process Automation, or RPA—in the early 2000s. RPA allows companies to automate repetitive, rule-based tasks using software robots, or “bots,” freeing up humans to focus on more complex, valuable work.

In its initial days, RPA relied on rule-based automation. This worked well for simple, structured processes yet resulted in rigid bots that could only handle tasks with specific, predetermined steps. These bots generally lacked AI, meaning they couldn’t learn, adapt, or improve over time. Even when RPA solutions did incorporate some AI, they were limited to working with structured data, leaving the vast amount of enterprise data that’s unstructured (like emails, documents, and images) untapped. According to many analyst estimates, unstructured data makes up 80–90% of all business data.

These limitations led to mixed results for RPA adoption. Despite some early successes, RPA fell short of the enterprise-wide deployments predicted by consulting firms like McKinsey in 2017 and 2019. An EY study found that 30–50% of RPA projects fail, while a Deloitte survey revealed that only 3% of companies were able to successfully scale their RPA initiatives.

Recent advances in AI are poised to change this. By integrating LLMs, bots gain powerful new capabilities that significantly expand their potential applications, heralding a transition from RPA bots to autonomous AI agents that can work alongside humans in almost any domain.

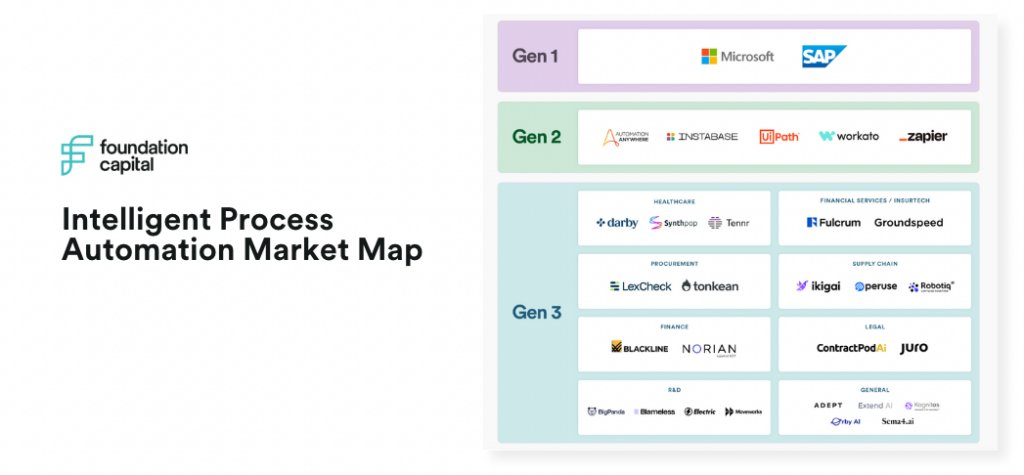

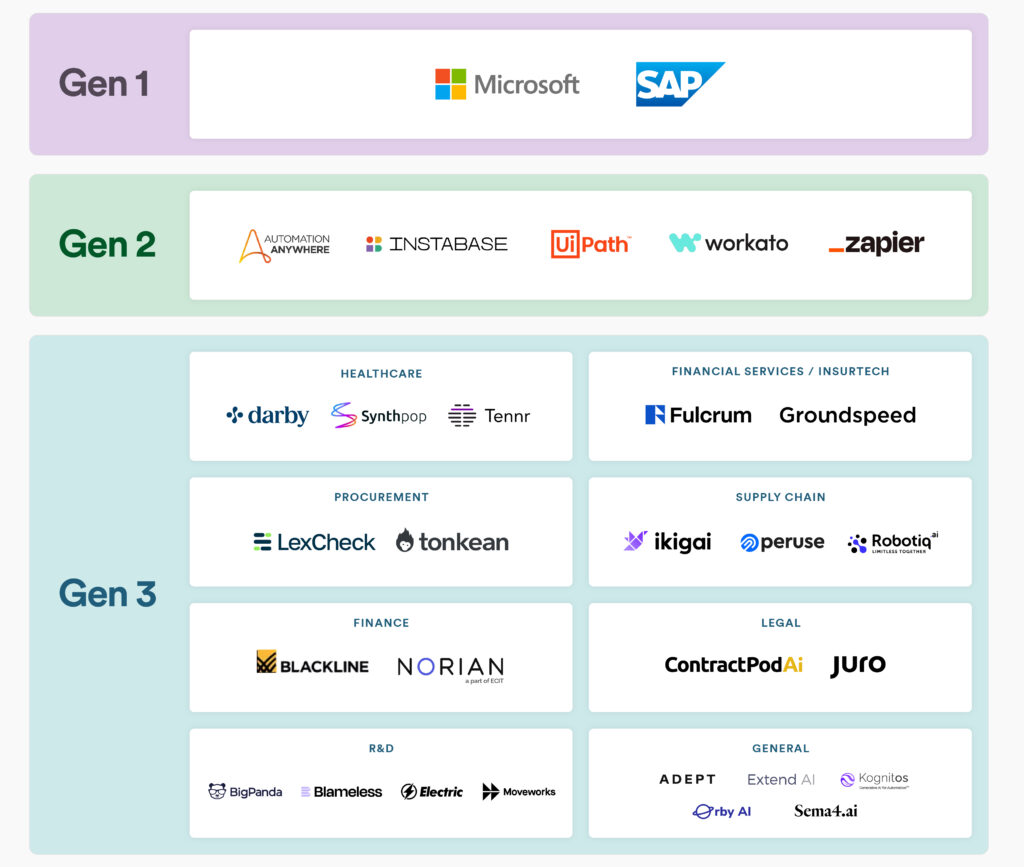

In this post, we’ll trace the evolution of intelligent process automation across three generations, each marked by advancements in underlying technologies and expanding use cases. Starting with the rule-based automation of the early 2000s, we’ll move on to the cloud-based bots of the mid-2010s, and finally explore the LLM-powered agents that are emerging today.

It’s our belief that, taken together, LLMs’ novel capabilities prime the market opportunity for intelligent process automation to grow by at least 10x in the coming decade. This makes it an extremely promising space for AI-focused founders, especially those with expertise in domains that have traditionally been underserved by automation.

Generation 1: On-prem, rules-based automation

The early 2000s saw the advent of RPA, led by tech giants like Microsoft and SAP. These solutions focused on automating simple, repetitive tasks that were common in back-office functions, such as entering data, processing invoices, reconciling financial records, and handling insurance claims.

First-generation RPA bots followed strict, hard-coded instructions. If a task deviated from its predefined rules, the bot would either stop working or make mistakes without realizing it. As companies grew and their processes became more complex, the limitations of these rules-based bots became increasingly apparent. Even small changes, like a slight modification to a form field, could derail an entire automated workflow. Keeping these RPA bots up and running required constant monitoring, updates, and rebuilding.
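To make that brittleness concrete, here is a minimal sketch of a hard-coded bot. It is an illustration only, not the code of any real RPA product: the field names, the `process_invoice` function, and the sample forms are all hypothetical.

```python
# Hypothetical rule-based bot: the expected field names are hard-coded.
REQUIRED_FIELDS = ("invoice_number", "amount", "due_date")


def process_invoice(form: dict) -> dict:
    """Copy a fixed set of fields from a form into a ledger record.

    Any deviation from the expected field names stops the bot.
    """
    record = {}
    for field in REQUIRED_FIELDS:
        if field not in form:
            raise KeyError(f"missing field: {field}")
        record[field] = form[field]
    return record


def try_process(form: dict):
    """Return the ledger record, or None if the bot could not handle the form."""
    try:
        return process_invoice(form)
    except KeyError:
        return None


# The form the bot was built for.
original_form = {
    "invoice_number": "INV-001",
    "amount": "120.00",
    "due_date": "2024-04-01",
}

# The same form after one field was renamed upstream.
renamed_form = {
    "invoice_no": "INV-001",
    "amount": "120.00",
    "due_date": "2024-04-01",
}

handled = try_process(original_form)  # a complete record
broken = try_process(renamed_form)    # None: the rename derails the workflow
```

A single renamed key is enough to stop the workflow, which is why keeping bots like this running meant repeated manual rebuilds.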

Many companies approached RPA as a band-aid for outdated IT systems. This led to significant integration challenges and costs, as the bots had to be customized to work within legacy software environments. Setting up an RPA solution usually required hiring specialized consultants who spent weeks or months mapping out the company’s existing tech stack and processes.

This lengthy setup process, combined with the cost of on-prem infrastructure and ongoing maintenance, made it difficult for organizations to achieve high ROI on their RPA investments. Scaling these initiatives across the enterprise was even more challenging, as each new process or system required its own customized bot.

Generation 2: Cloud-based, horizontal solutions

By the mid-2010s, a new generation of RPA bots came to market, characterized by cloud-based platforms. Companies like Automation Anywhere, UiPath, and Zapier developed horizontal solutions that could handle tasks that spanned multiple business functions and software systems.

These second-generation RPA bots worked by capturing the business logic of a process and integrating with other software tools, such as email clients, chat apps, cloud storage services, CRMs, and ERP systems. Once configured, they could automatically manage requests, orchestrate workflows across multiple systems and teams, and ensure data consistency across an organization’s entire tech stack.

Cloud computing and APIs were key enablers of this more expansive approach to RPA. Cloud-based platforms allowed companies to use RPA without significant upfront investments in hardware and IT infrastructure. APIs made it easier to connect RPA bots with multiple databases and software applications, reducing the need for custom integrations. API-based automations also proved far more stable and resilient than their first-generation, GUI-based counterparts, which were prone to breakdown when elements of an interface changed.

Second-generation RPA solutions also started incorporating AI. This allowed bots to tackle more complex tasks that required some level of judgment or understanding of unstructured data. However, these AI capabilities were often narrow in scope and still relied heavily on structured data and predefined workflows.

Generation 3: LLM-powered agents

Today, we’re seeing the rise of a third generation of intelligent process automation, driven by the rapid advancement of LLMs and other generative AI technologies. Unlike previous generations, where AI was often an add-on or afterthought, these new solutions are being built with generative AI at their core.

In a step-change improvement from first- and second-generation RPA bots, LLMs can power intelligent agents that can grasp context, interpret user intent, apply reasoning to make complex decisions, and adapt to new tasks. Importantly, they can work with unstructured data: a capability that opens up verticals like healthcare, financial services, and legal that have been harder to automate due to their reliance on data that doesn’t fit neatly into structured databases. LLMs can also be fine-tuned on data specific to these domains, allowing them to develop a deep understanding of the terminology, concepts, and workflows that characterize each one.

Because LLMs can understand and generate natural language, they can do the same with code, unlocking high-value, end-to-end automations in software development and maintenance. Equipped with both natural language and image understanding, generative models allow agents to navigate any digital environment, including websites, software applications, and databases, using virtual tools like keystrokes, mouse clicks, and scrolling. LLMs can also interact with APIs, granting agents access to a wide range of software tools and services. Eventually, this will allow users to express their desired goals in plain text without needing to specify the steps required to achieve them.
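As a rough illustration of this goal-driven pattern, the sketch below shows an agent loop that repeatedly asks a planner which tool to call next until the goal is met. Everything here is an assumption made for illustration: `stub_planner` is a hard-coded stand-in for an LLM call, and `lookup_order` and `send_email` are hypothetical tools that a real agent would replace with API calls or UI actions.

```python
from typing import Callable, Dict, List, Optional, Tuple


# Hypothetical tools; a real agent would wrap API calls or UI actions.
def lookup_order(order_id: str) -> str:
    return f"order {order_id}: shipped"


def send_email(message: str) -> str:
    return f"email sent: {message}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "send_email": send_email,
}


def stub_planner(goal: str, history: List[str]) -> Optional[Tuple[str, str]]:
    """Stand-in for an LLM call (an assumption, not a real model).

    Returns (tool_name, argument) for the next step, or None when done.
    """
    if not history:
        return ("lookup_order", "A-17")
    if len(history) == 1:
        return ("send_email", history[0])
    return None


def run_agent(goal: str,
              planner: Callable[[str, List[str]], Optional[Tuple[str, str]]] = stub_planner,
              max_steps: int = 5) -> List[str]:
    """Ask the planner for the next action until it signals completion."""
    history: List[str] = []
    for _ in range(max_steps):
        step = planner(goal, history)
        if step is None:
            break
        tool_name, argument = step
        history.append(TOOLS[tool_name](argument))
    return history


results = run_agent("Tell the customer where order A-17 is")
```

The user supplies only the goal; the sequence of steps comes from the planner. The `max_steps` cap is one simple way to keep a loop like this from running indefinitely.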

The rise of vertical-specific solutions

As LLM-powered process automation continues to mature, we expect to see a proliferation of AI-native, vertical-specific solutions that can automate increasingly sophisticated cognitive tasks.

Startups like Tennr and Ikigai are already demonstrating the potential of this approach.

Tennr’s LLM-based platform streamlines back-office operations for medical practices, handling tasks like scheduling patient appointments, coordinating referrals, auditing insurance claims, managing medical records, and posting payments.

Ikigai focuses on transforming supply chain management. It’s using LLMs to aggregate data from diverse sources, like purchase orders, vehicle tracking information, and IoT sensors, and provide real-time visibility across an entire value chain. This allows its models to detect and mitigate potential issues: for example, by rerouting orders to prevent production disruptions caused by shipment delays or adjusting production and inventory based on predicted demand.

Other startups like Tonkean and Fulcrum are addressing industry-specific challenges in areas like procurement, financial services, and insurance. As these solutions mature, they’ll unlock new opportunities for automation across virtually every business function and industry.

In the market map below, we’ve outlined the key players in each generation of intelligent process automation, along with some of the vertical-specific opportunities in this current generation that we’re most excited about.

Call for startups

At Foundation, we believe that the convergence of LLMs, generative AI, and agent architectures will drive exponential growth in the adoption of intelligent process automation in enterprises. Workflows once considered too complex to automate will soon be delegated to intelligent agents capable of navigating interfaces, reasoning through problems, and interacting with APIs to complete tasks and autonomously take actions.

If you’re a founder with a vision for applying these technologies to transform how businesses operate, we’d love to hear from you: jchen@foundationcap.com and jgupta@foundationcap.com. We back entrepreneurs from the earliest stages—it’s never too soon to reach out.

Published on 01.31.2023

Written by Foundation Capital