Beyond LLMs: Building magic

06.27.2024 | By: Ashu Garg, Jaya Gupta

Large language models reshaped AI. Though they were invented in 2017, LLMs entered the mainstream with the November 2022 launch of ChatGPT, the fastest-growing consumer application of all time. Since then, there has been an explosion of innovation. LLMs are likely the most transformative technology since the web browser, changing everyday activities like internet search, image creation, and many forms of writing. Within the enterprise, LLMs have the potential to redefine most white-collar jobs and critical business functions.

In 2022, it felt like the race was over and OpenAI had won. Today, however, the race is back on, with a number of viable alternatives to the company’s ChatGPT and other GPT models. In 2023 alone, nearly 150 LLMs were released with models from Meta, Amazon, Google, Microsoft, Anthropic, and Mistral, all vying to supplant GPT-4. Overall, performance gaps have narrowed, and model prices are decreasing, with inference costs dropping by more than half in the past year.

Every CEO, whether they make computer chips or potato chips, has announced an AI strategy, and markets are anticipating profit improvements across the board as a result of AI. At the same time, enterprise deployment of LLM-based applications remains nascent. LLMs have plenty of shortcomings, including hallucinations, bias, and data exfiltration. There are also a host of adoption challenges, including the fact that most company data needs significant preprocessing before it can be used by a model, and use cases that worked in demos quickly break down when scaled up to production.

As founders begin to deploy LLM-based applications at scale, they should look to leverage three innovations:

- Multimodal models are moving beyond text, opening up new use cases.

- Multi-agent systems transform our ability to automate complex tasks.

- New model architectures address the limitations of transformers.

Here we’ll explore how all three will drive transformation across diverse sectors.

Multimodal models are moving beyond text

Multimodal AI means that the inputs and/or outputs can be text, images, audio, and video. OpenAI recently rolled out its GPT-4o model which does end-to-end processing of multimodal data within the same model, leading to significant advances in performance. One day after the OpenAI announcement, Google rolled out its own multimodal model, and Anthropic did the same last week.

All of this moves AI closer to human-like cognition and brings the physical world closer to the digital one. Think of multimodal models as a supercharged Siri or Alexa, where the model can incorporate context clues from audio (background noise, emotion, or tone) along with visual information (things like facial expressions and gestures). By integrating multimodal data, these models are generating richer insights, understanding not only a human’s words, but also those words’ nuanced meanings—and then using that understanding to respond to complex scenarios.

Multimodal capabilities open up a new realm of industry use cases. Customer service chatbots get a boost from faster and more accurate voice agents, resulting in more natural-seeming interactions. In healthcare, where diagnoses typically rely on a mixture of data types, new applications can have a huge impact. Color Health is working with OpenAI on a copilot app that uses GPT-4o in cancer patient treatment plans. The copilot extracts and normalizes patient information found in disparate formats, such as PDFs or clinical notes, identifying missing diagnostics and generating a personalized cancer screening plan. Beyond healthcare, multimodal AI will shake up fields that regularly deal with diverse data from both the physical and digital worlds, areas like robotics, e-commerce, and manufacturing.

The possibilities for content analysis and generation use cases are also extensive. Multimodal models can intake multiple content formats (videos, podcasts, presentations) and create outputs that combine audio, text, and visual elements. This could be useful in:

- Content repurposing: Marketing teams might quickly remix existing content into new formats or campaigns.

- Multilingual adaptation: Converting existing content to multiple languages for global distribution.

- Enhanced training: Creating immersive role-playing scenarios and interactive simulations for employee onboarding and skill development.

Multi-agent systems

While multimodal models expand the input and output capabilities of AI, combining multimodal models with agentic systems transforms our ability to automate complex tasks and processes.

So what is a multi-agent system? Such a system takes an overall goal, breaks it down into a series of steps, executes that sequence transferring output from one step to the next, and ultimately combines outputs to achieve an outcome. The system has agency because it plans and initiates actions independently based on its assessment of a given situation. An agent (or several) will make the decision to call multiple interacting systems and possibly even check in with a human if required.

Where an AI model generates output in a single pass, an agent is different in that it follows a process akin to humans approaching challenging goals. Agents gather information, draft initial solutions, self-reflect to identify weaknesses, and revise for optimal output. While model performance has improved exponentially, models by themselves are still akin to Q&A bots; human inputs a question, model answers a question (though the questions are increasingly difficult). Agents are a new paradigm; they resemble human beings, in that they take on projects with end-to-end accountability. Agents transform AI from a passive tool into an active team member.

Agents are a new paradigm. They transform AI from a passive tool into an active team member.

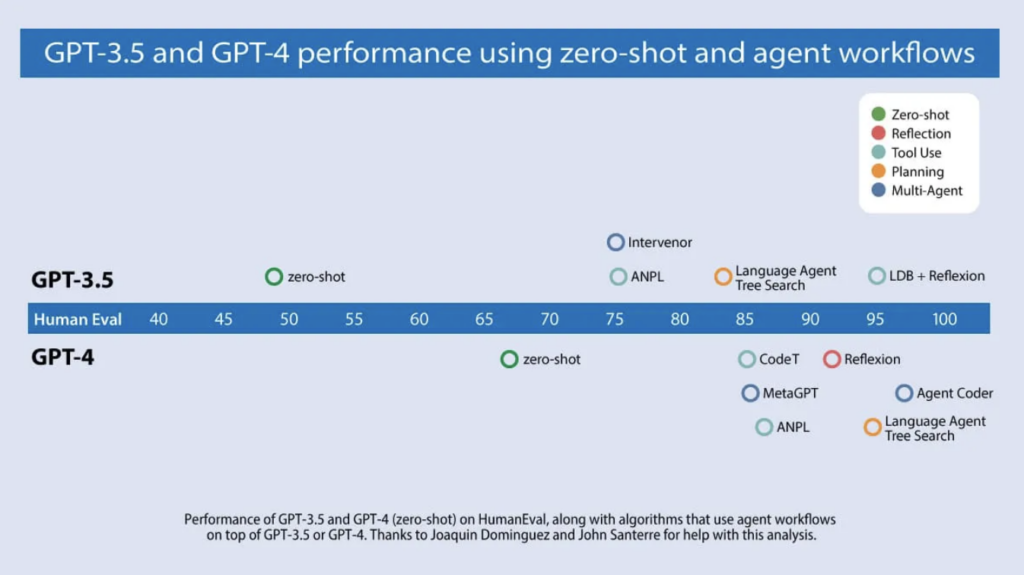

Recent research has demonstrated the power of collaborative agentic systems. Microsoft’s AutoGen project has shown that multi-agent systems can significantly outperform individual agents on complex tasks. In a coding challenge, Andrew Ng’s team tested an LLM alone vs an agentic system on the HumanEval benchmark (common for evaluating the functional correctness of code). With prompting, GPT-3.5 logged a 48% accuracy rate. When the LLM was wrapped in an “agent loop”—where agents used a number of techniques, including reflection (the LLM examines and improves its own work) and planning (the LLM devises and executes a multistep plan)—GPT-3.5 was 95% accurate. The dramatic improvement underscores the potential of multi-agent systems to tackle complex problems that stymie single-model, or even single-agent, approaches.

When multi-agent systems break complex plans down into smaller goals, they typically distribute those smaller goals to specialized “executor agents,” which are better at solving certain types of tasks. Back to the coding example, Ng and team found that dividing up coding tasks, letting one agent play software engineer, another play product manager, another play QA engineer, etc, brought the best results, especially when an agent focused on one thing at a time.

As we hand over more tasks to AI, agentic systems will require new designs, and many interfaces will need design overhauls. In a recent paper on agent-computer interfaces, John Yang and others introduced SWE-agent, an autonomous system that lets a model interact with a computer to tackle engineering tasks. They found that a custom-built interface was much more effective at creating and editing code than systems using RAG (SWE-agent solved 12.5% of issues, compared to 3.8% with RAG). Their more profound point: when an agent is in charge of work, the interface should be designed differently. GUIs and other interfaces with rich visuals are designed for humans, not computers. When an LLM uses a human interface, the result is errors and inefficiencies. Because digesting information from websites will be so valuable for teaching agents, the design of new interfaces is critical. Websites optimizing for AI-friendly API interactions will have a competitive edge and first-mover advantage.

Handing over tasks to agents comes with a number of challenges. Most agents execute a series of LLM calls in order to determine which actions to take next. However, productionizing these systems presents can prove risky in terms of infrastructure and reliability. Consider this: if a top-performing model has an 87% tool-calling accuracy, the probability of successfully completing a task requiring five consecutive tool calls drops to just 49.8%. As task complexity increases, this probability plummets. An agent’s ability to accurately determine which tools to use is especially critical and still not at a production standard for many complex tasks today.

Another challenge: ensuring that agents can consistently execute longer-horizon tasks using real-world data. For extended tasks, LLM-powered agents must “remember” relevant information over long periods, accurately recalling past actions and outcomes. Despite some LLMs accepting extremely long inputs, their performance on long-context tasks is currently mixed, with most evaluations relying on synthetic data. New techniques for grounding agents on real-world, accurate, up-to-date information will be critical moving forward.

New model architectures

Today, most startup activity and venture capital interest is built around scaling and improving transformer-based models. Despite their remarkable capabilities, LLMs aren’t well suited for every problem. We believe that new model architectures have the potential to be equally (or more) performant, especially for specialized tasks, while also being less computationally intensive, exhibit lower latency, and more controllable.

We believe that new model architectures have the potential to be equally (or more) performant, especially for specialized tasks, while also being less computationally intensive, exhibit lower latency, and more controllable.

Some promising model architectures include:

- State-space models: Where LLMs are brilliant with words, they’re not brilliant for processing more data-intensive items like audio clips or videos. Cartesia is developing state-space models to be used for (among other things) instantly cloning voices and generating high-quality speech. Cartesia’s models use the Mamba architecture, which represents a data sequence without requiring explicit knowledge of all the data that came before—meaning they are more efficient than LLMs for handling long sequences.

- Large graphical models: Our portfolio company Ikigai is developing large graphical models, which use a graph-based system to visually represent relationships between different variables. LGMs are adept at handling structured information, especially time-series data, which makes them particularly relevant for forecasting a host of business related information including demand, inventory, costs, and labor requirements.

- RWKV: These models take a hybrid approach, combining the parallelizable training of transformers with the linear scaling in memory and compute requirements of recurrent neural networks (RNNs). This allows them to achieve comparable performance to transformers on language modeling tasks while being markedly more efficient during inference.

We are at a unique time in history. Every layer in the AI stack is improving exponentially, with no signs of a slowdown in sight. As a result, many founders feel that they are building on quicksand. On the flip side, this flywheel also presents a generational opportunity. Founders who focus on large and enduring problems have the opportunity to craft solutions so revolutionary that they border on magic.

If you are building magic, please email [email protected] and [email protected].

Published on 06.27.2024

Written by Foundation Capital