Cerebras lands on the TIME100 Most Influential Companies list

06.04.2024 | By: Steve Vassallo

Andrew Feldman explains an extremely difficult problem in relatively simple terms. He’s the Co-Founder and CEO of Cerebras, which develops specialized hardware and software systems to advance AI workloads.

“Build really big chips,” he said at Cerebras’s AI Day. “Except nobody’s ever done it before.”

I first met Andrew in 2009 as he was raising money for his previous company, SeaMicro. We didn't invest in the company, but I knew I wanted to invest in Andrew in whatever he did next. So we stayed close and talked frequently, and when SeaMicro was acquired by AMD, we set a proverbial egg timer, knowing he'd pop out at some point. When he did, we spent the next two years riffing on half a dozen ideas until he converged on building systems for AI workloads. Within those two years, AI workloads were on a full-on vertical growth trajectory, outstripping Moore's Law by more than 25,000x.

We moved from a world where software ran on a single system to one that needs supercomputer performance. But AI developers have struggled to train large models on graphics processing units (GPUs), which were built for a different purpose. Just because a piano can float doesn't make it a good life raft. The models are so big that AI devs are forced to train them across hundreds or thousands of GPUs, which requires a lot of coordination and optimization. AI, in a way, has become a distributed computing problem.

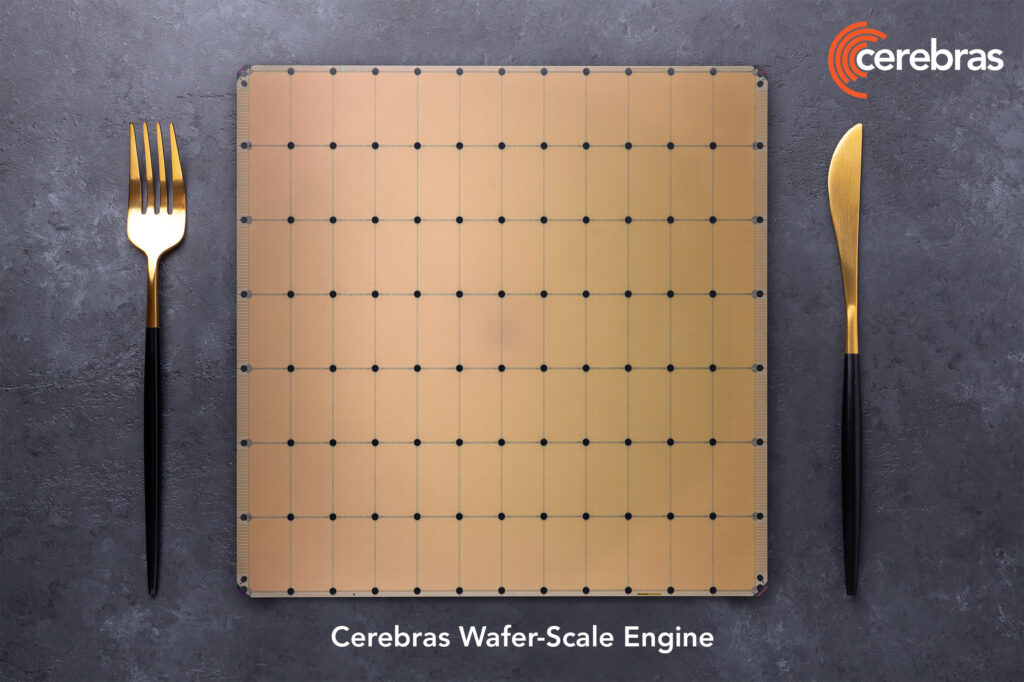

Cerebras builds chips that are big enough to hold the entire problem: its wafer-scale processors (the WSE-3, the fastest AI processor on Earth), its AI infrastructure (the CS-3, which delivers cluster-scale performance on a single chip) and its supercomputers (the Condor Galaxy, which has 16 exaFLOPs of AI compute). These products work together to advance the frontier of computing.

Foundation Capital is proud to have been an early investor in Cerebras. And today, Cerebras was named to the TIME100 Most Influential Companies list. I spoke with Andrew to discuss some background on starting the company and exciting applications of its technology.

Steve Vassallo: Looking at AI workloads back in 2015, most people would've said it was a king's game, for Google, Facebook, Amazon, maybe Microsoft. At that time, you were contemplating the idea for Cerebras because clearly you saw something different happening.

Andrew Feldman: We were doing some of that thinking together in your office, actually. As computer architects, we look at the world as problems that we may or may not be able to solve. We see the rise of a new workload and ask: can we build a better machine that's really good at that work? And if it's sufficiently better at that work, should we make the processor happen? Is the market going to be big enough to support a company that's good at that thing?

When we saw the first inklings of a new AI workload in late 2015, we asked ourselves these questions. We also asked ourselves why we might expect a graphics processing unit (GPU), a machine that had been built to push pixels to a monitor and was very good at that, to also be good at this AI problem. We came to the conclusion that we could in fact build a better machine.

SV: That is a massive undertaking, but one that, given your background, you might’ve had the stomach for.

AF: We weren't afraid. I'm a professional David in the battle with Goliath. Cerebras is my fifth startup; three of the others were acquired and one went public. Each time we were acquired, my belief grew stronger that startups can beat big companies in technical transitions. So we came to believe that we could do it. We could build a better platform. And the recently announced Cerebras Wafer-Scale Engine 3 (WSE-3) is on the order of 100x faster than the fastest NVIDIA GPU.

SV: What are some of the most interesting applications you’re seeing built on top of Cerebras’s technology?

AF: I think AI is made of three things: compute, a model, and data, and then it's pointed at a problem. We built the compute, and we built so much compute that we became experts at running models. So our customers bring the interesting data, and they want to use that data to solve a particular problem.

One of our customers is Mayo Clinic. They've got the largest amount of medical data in the world, and we're training models that identify better drugs for rheumatoid arthritis. Another is Argonne National Laboratory. Using a generative AI model, we were able to take early genetic data on COVID-19 and predict mutations. When you decipher the genetic patterns of these variants, you can more quickly identify the variants of concern and implement timely public health interventions.

SV: In addition to those customers, you've also partnered with the National Laboratories to accelerate molecular dynamics simulations, helping scientists better see into the future. You work with GlaxoSmithKline to assess data and speed up drug discovery. The medical applications are so exciting. But on the technology side, you've also just broken ground on a huge project with G42, the Abu Dhabi-based technology holding group.

AF: That's right. We've partnered with G42 to build and operate the largest AI supercomputing clouds. For context, the US Government funded Oak Ridge National Laboratory to put up a 2 exaFLOP supercomputer. Our Condor Galaxy 1 and Condor Galaxy 2 deliver a combined 8 exaFLOPs of AI supercomputing performance. Our newest addition, the Condor Galaxy 3, will itself deliver 8 exaFLOPs, bringing the total to 16 exaFLOPs across the Condor Galaxy network.
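Taking the figures quoted here at face value, the Condor Galaxy total and its comparison with the Oak Ridge system work out as below. This is a rough comparison only: AI exaFLOP figures are generally quoted at lower numerical precision than the double-precision figures used to rank traditional supercomputers.

$$
\begin{aligned}
\underbrace{8}_{\text{Condor Galaxy 1 + 2}} + \underbrace{8}_{\text{Condor Galaxy 3}} &= 16\ \text{exaFLOPs} \\
\frac{16\ \text{exaFLOPs}}{2\ \text{exaFLOPs (Oak Ridge)}} &= 8\times
\end{aligned}
$$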

SV: An exaFLOP-scale system performs one quintillion (10¹⁸) floating-point operations per second, the highest level of computing performance reached so far. It's a huge milestone in supercomputing. But many in the field have set their sights on the next horizon: the zettaflop, which exists only in theory at this point.

AF: Over the last six months, we've built two 4 exaFLOP supercomputers for a single customer, and we've just started an 8 exaFLOP supercomputer for them. In the remainder of the year, we'll put up another 30 or 40 exaFLOPs for this customer. Over 18 months, we're going to end up with somewhere between 185 and 200 exaFLOPs, which is roughly 20% of a zettaflop.
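The unit arithmetic behind the zettaflop comparison, using only the figures quoted in this interview, is sketched below. The year-end line assumes the additional 30 to 40 exaFLOPs come on top of the three systems already mentioned, which is one reading of "another".

$$
\begin{aligned}
1\ \text{zettaflop} &= \frac{10^{21}}{10^{18}}\ \text{exaFLOPs} = 1000\ \text{exaFLOPs} \\
4 + 4 + 8 + (30\ \text{to}\ 40) &= 46\ \text{to}\ 56\ \text{exaFLOPs by year-end} \\
\frac{185}{1000} = 18.5\%, &\qquad \frac{200}{1000} = 20\%
\end{aligned}
$$

So the 18-month projection corresponds to 18.5% to 20% of a zettaflop, with 20% at the top of the range.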

SV: Thanks for taking the time here, Andrew. And congrats again on Cerebras's inclusion on the TIME100 Most Influential Companies list. Will making this list finally impress your mom?

AF: You joke, but she still introduces me as “Andrew, the only one of my sons who isn’t a doctor.” So…maybe.
